JobMon - Job Performance Metrics

With JobMon we offer a web-based performance service. It visualizes selected performance metrics that are collected on the cluster nodes while a user's job is running. Metrics are stored for at least 4 weeks, so that performance changes can be tracked over time.

Benchmarks

In this documentation, JobMon use cases are demonstrated with benchmarks from three different categories:

Compute bound

Performance is limited by the speed of the compute units; typically this means it is limited by the rate at which floating point operations can be executed. Benchmarks from this category include:

- DGEMM performs a matrix-matrix multiplication C = A · B. For square n × n matrices, the computation requires O(n³) floating point operations but only O(n²) memory operations. Implementations for both CPU and GPU are available for this benchmark.
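To make the O(n³) versus O(n²) argument concrete, the following Python sketch computes the arithmetic intensity (floating point operations per byte of memory traffic) of DGEMM. It assumes 8-byte double precision values, the conventional count of 2·n³ operations, and the idealized case in which A, B and C each move between memory and processor exactly once; real implementations move more data than this lower bound.

```python
def dgemm_counts(n: int, bytes_per_element: int = 8) -> tuple[int, int]:
    """Return (floating point operations, minimum bytes moved) for C = A * B
    with n x n matrices."""
    # Conventional count: n multiplications and n additions per entry of C.
    flops = 2 * n**3
    # Idealized traffic: read A and B once and write C once.
    bytes_moved = 3 * n**2 * bytes_per_element
    return flops, bytes_moved


def arithmetic_intensity(flops: int, bytes_moved: int) -> float:
    """Floating point operations per byte of memory traffic."""
    return flops / bytes_moved


for n in (100, 1000, 10000):
    flops, bytes_moved = dgemm_counts(n)
    print(f"n = {n:>5}: {arithmetic_intensity(flops, bytes_moved):7.1f} FLOP/byte")
```

Under these assumptions the intensity equals n/12, so it grows linearly with the matrix size. Large matrix multiplications can therefore keep the compute units busy without saturating the memory bandwidth.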

Memory bound

Performance is limited by the speed of the main memory subsystem. This can be caused by saturation of the memory bandwidth or by memory access latency. Benchmarks from this category include:

- Stream performs four sub-benchmarks: vector copy c = a, vector scale b = α · c, vector add c = a + b and vector triad a = b + α · c. For n-dimensional vectors, both the memory operations and the compute operations scale as O(n), so only a small, constant number of floating point operations is performed per element loaded from memory. Implementations for both CPU and GPU are available for this benchmark.

- High Performance Conjugate Gradients (HPCG) applies the conjugate gradient method to a sparse linear system. Implementations for both CPU and GPU are available for this benchmark.
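For comparison with compute-bound kernels, the sketch below counts floating point operations and bytes per vector element for the four Stream kernels, using the usual convention of 8-byte doubles without write-allocate traffic. It also gives a rough estimate for a sparse matrix-vector product in CSR format, a core kernel of conjugate gradient solvers such as HPCG. The 8-byte value and 4-byte index layout and the assumption that vector entries are served from cache are illustrative choices, not details of the HPCG implementation.

```python
# Floating point operations and bytes moved per vector element for the four
# Stream kernels (8-byte doubles, write-allocate traffic not counted).
STREAM_KERNELS = {
    "copy": (0, 2 * 8),   # c = a
    "scale": (1, 2 * 8),  # b = alpha * c
    "add": (1, 3 * 8),    # c = a + b
    "triad": (2, 3 * 8),  # a = b + alpha * c
}


def intensity(flops: float, bytes_moved: float) -> float:
    """Floating point operations per byte of memory traffic."""
    return flops / bytes_moved


def csr_spmv_intensity(value_bytes: int = 8, index_bytes: int = 4) -> float:
    """Rough FLOP/byte per nonzero of y = A * x with A stored in CSR format.

    Illustrative assumption: each nonzero costs one multiply and one add,
    its value and column index are streamed from memory, and the entries
    of x and y are served from cache (an optimistic simplification)."""
    return intensity(2, value_bytes + index_bytes)


for name, (flops, bytes_moved) in STREAM_KERNELS.items():
    print(f"{name:>6}: {intensity(flops, bytes_moved):.3f} FLOP/byte")
print(f"CSR SpMV: {csr_spmv_intensity():.3f} FLOP/byte")
```

None of these values grows with the vector length, which is why such kernels are limited by memory bandwidth rather than by floating point performance.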

Communication bound

Performance is limited by the node-to-node communication network. This can be caused by saturation of the interconnect bandwidth or by communication latency. Benchmarks from this category include:

- The OSU Micro-Benchmarks (OMB) perform point-to-point communication with messages of increasing size to measure bandwidth and latency. Implementations for both CPU and GPU are available for this benchmark.
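Why measured bandwidth depends on message size can be illustrated with the simple Hockney (latency-bandwidth) model, in which a message of n bytes takes a fixed start-up latency plus n divided by the peak bandwidth. This is a textbook model swapped in for illustration, not the method OMB uses, and the latency and peak values below are hypothetical rather than measured HoreKa figures.

```python
def transfer_time(size_bytes: float, latency_s: float, peak_Bps: float) -> float:
    """Hockney model: a fixed start-up latency plus size / peak bandwidth."""
    return latency_s + size_bytes / peak_Bps


def effective_bandwidth(size_bytes: float, latency_s: float, peak_Bps: float) -> float:
    """Bandwidth observed when sending one message of the given size."""
    return size_bytes / transfer_time(size_bytes, latency_s, peak_Bps)


# Hypothetical link parameters, chosen only for illustration.
LATENCY_S = 1e-6  # 1 microsecond
PEAK_BPS = 25e9   # 25 GB/s

size = 1
while size <= 4 * 1024 * 1024:
    bw = effective_bandwidth(size, LATENCY_S, PEAK_BPS)
    print(f"{size:>8} B: {bw / 1e9:6.2f} GB/s ({bw / PEAK_BPS:6.1%} of peak)")
    size *= 4
```

In this model small messages are dominated by latency, while large messages approach the peak bandwidth; half of the peak is reached at a message size of latency × peak bandwidth.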

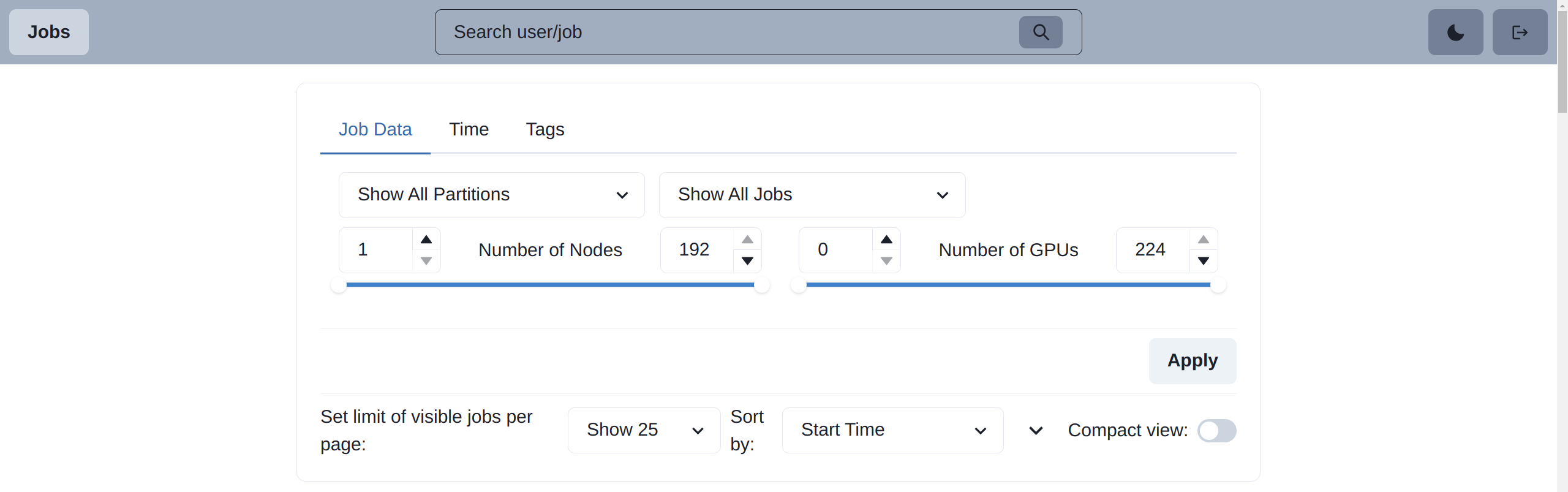

Jobs page

After logging in to the JobMon service, you are automatically redirected to the Jobs page.

This page presents an overview of the individual batch jobs that have been executed on the HoreKa cluster. These jobs can be filtered by:

- the partition the job ran on

- the number of nodes or number of GPUs used by the job

- whether the job is still running or has already finished

- the time period in which the job ran (e.g. jobs of the last week)

- tags assigned to the job (e.g. tags: "without optimization", "optimization A", "optimization B", ...)

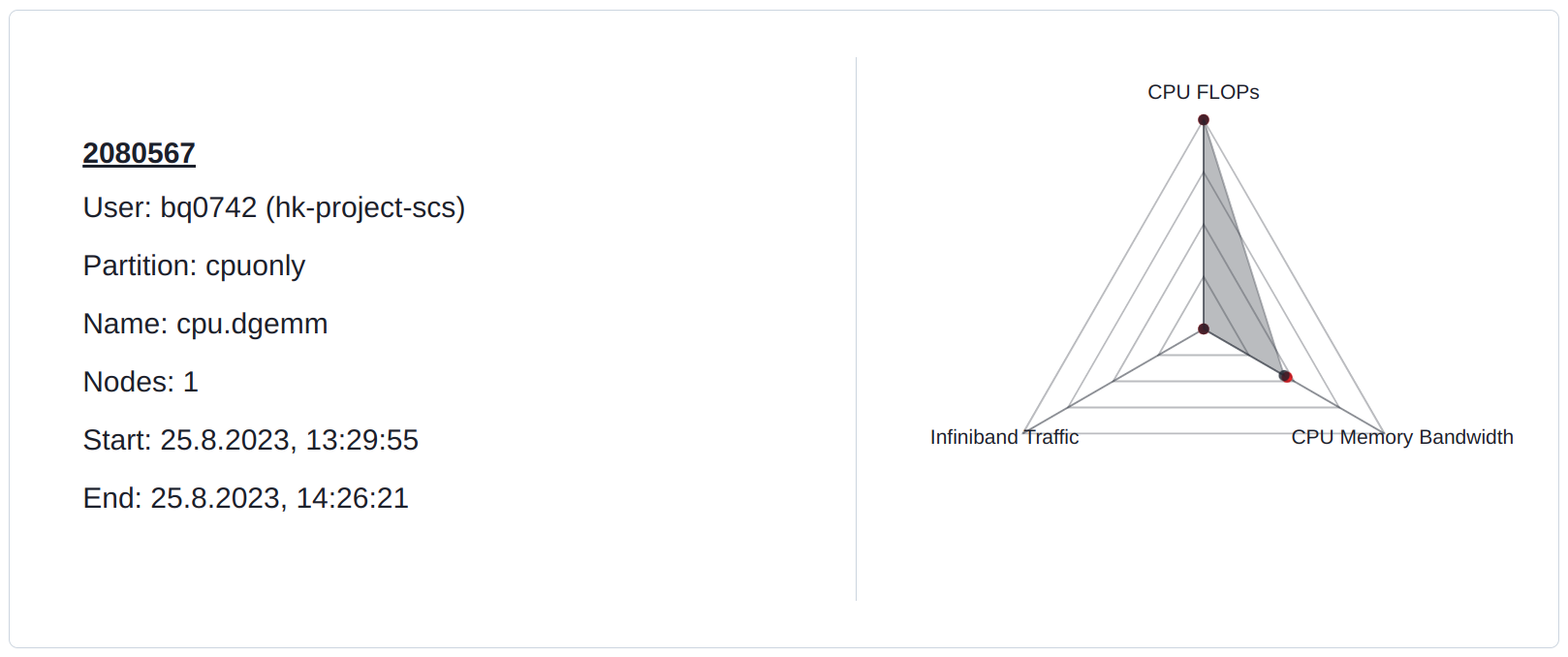

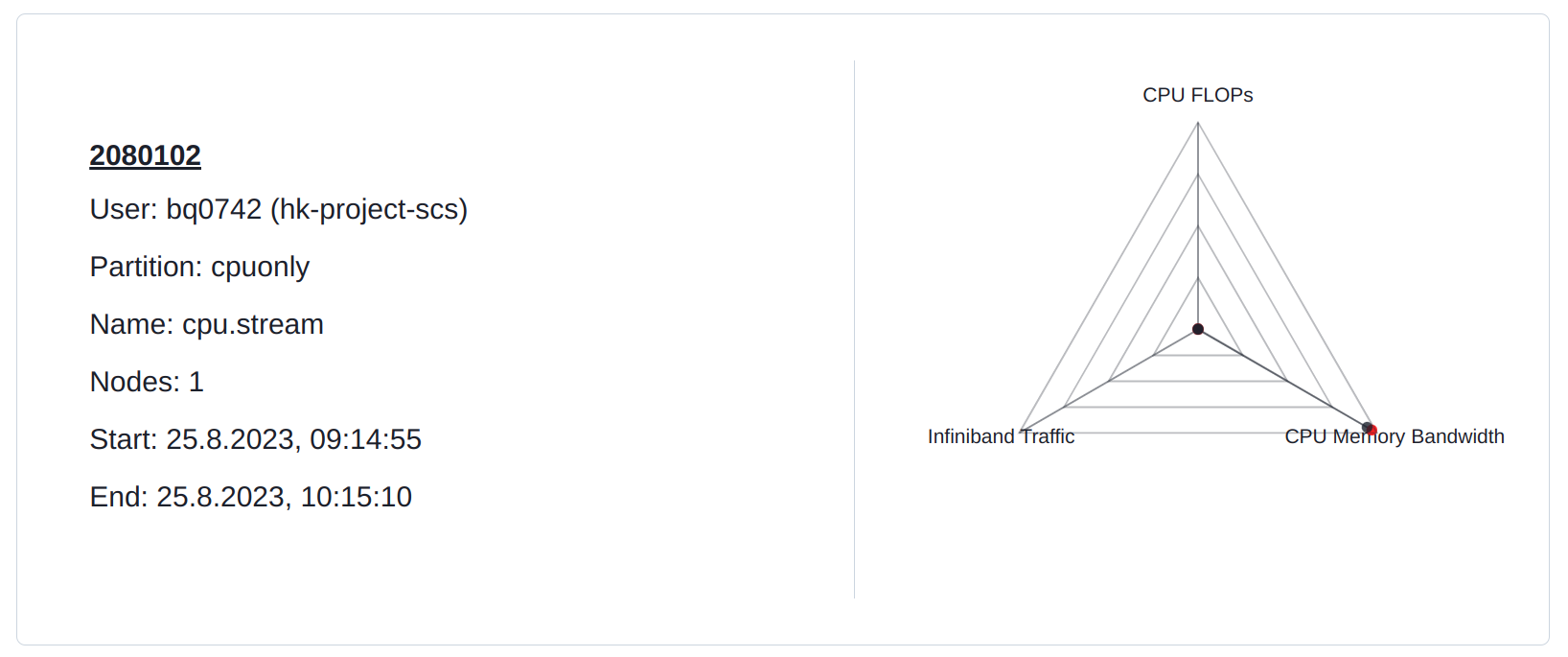

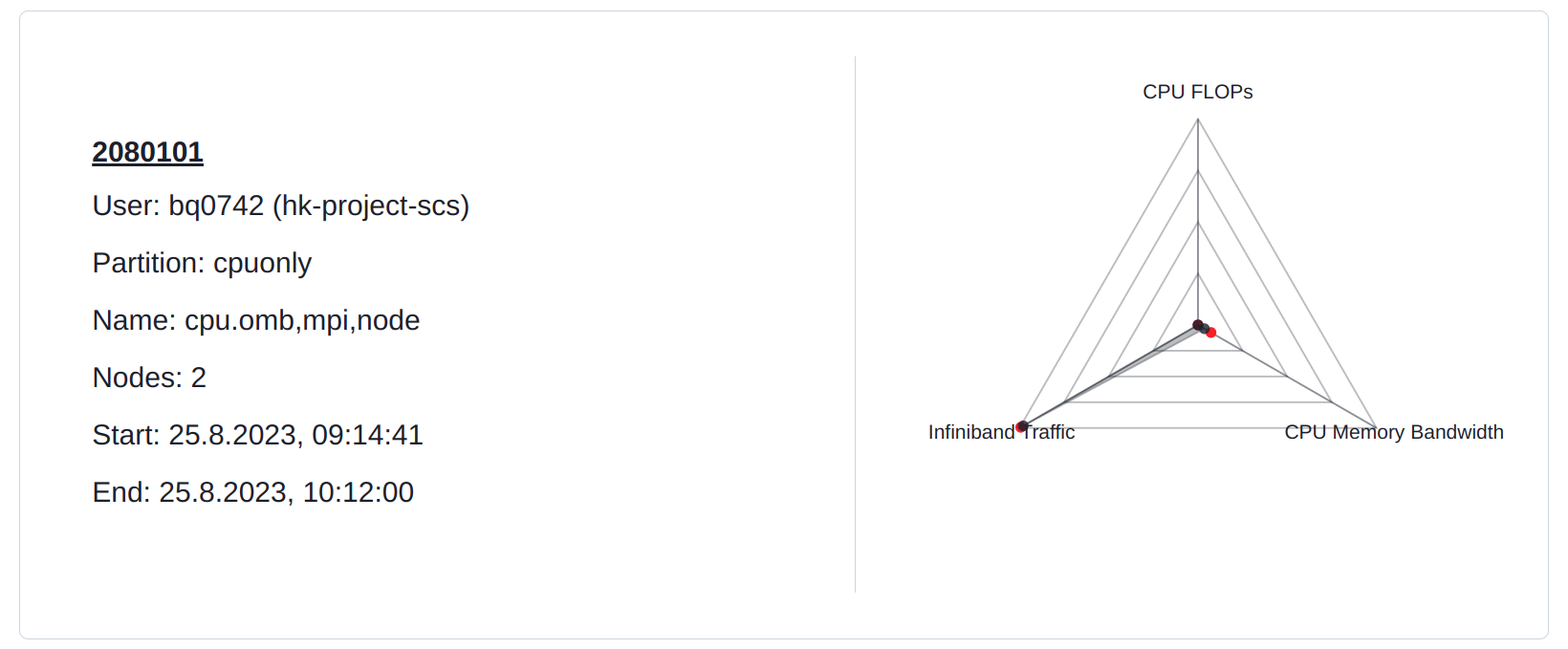

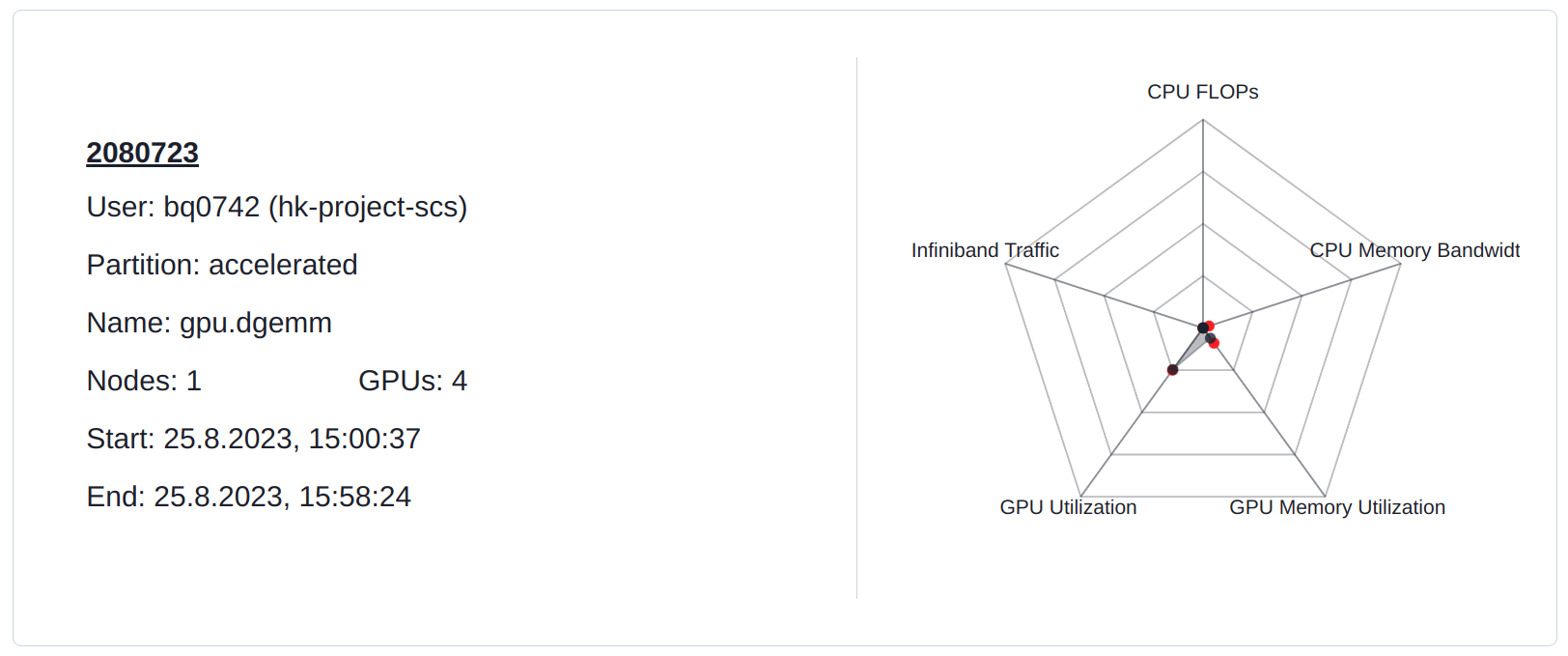

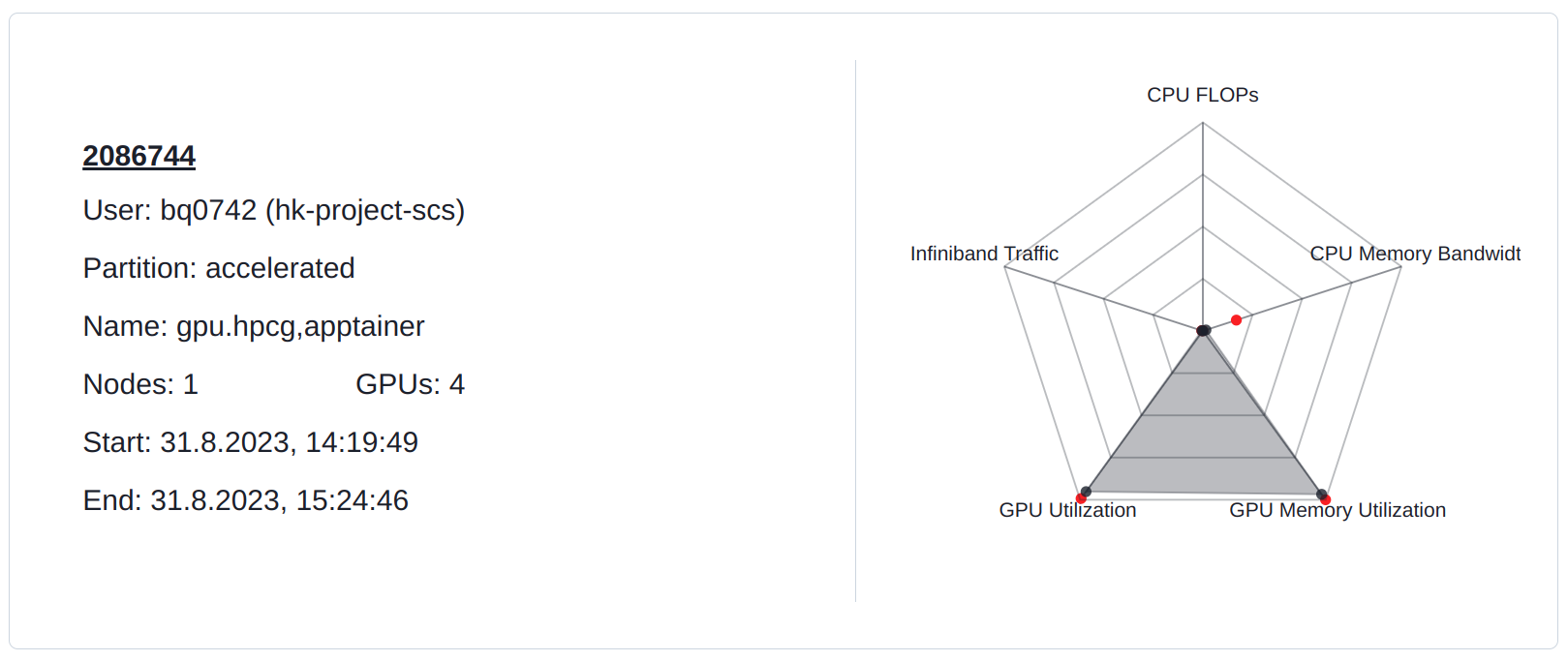

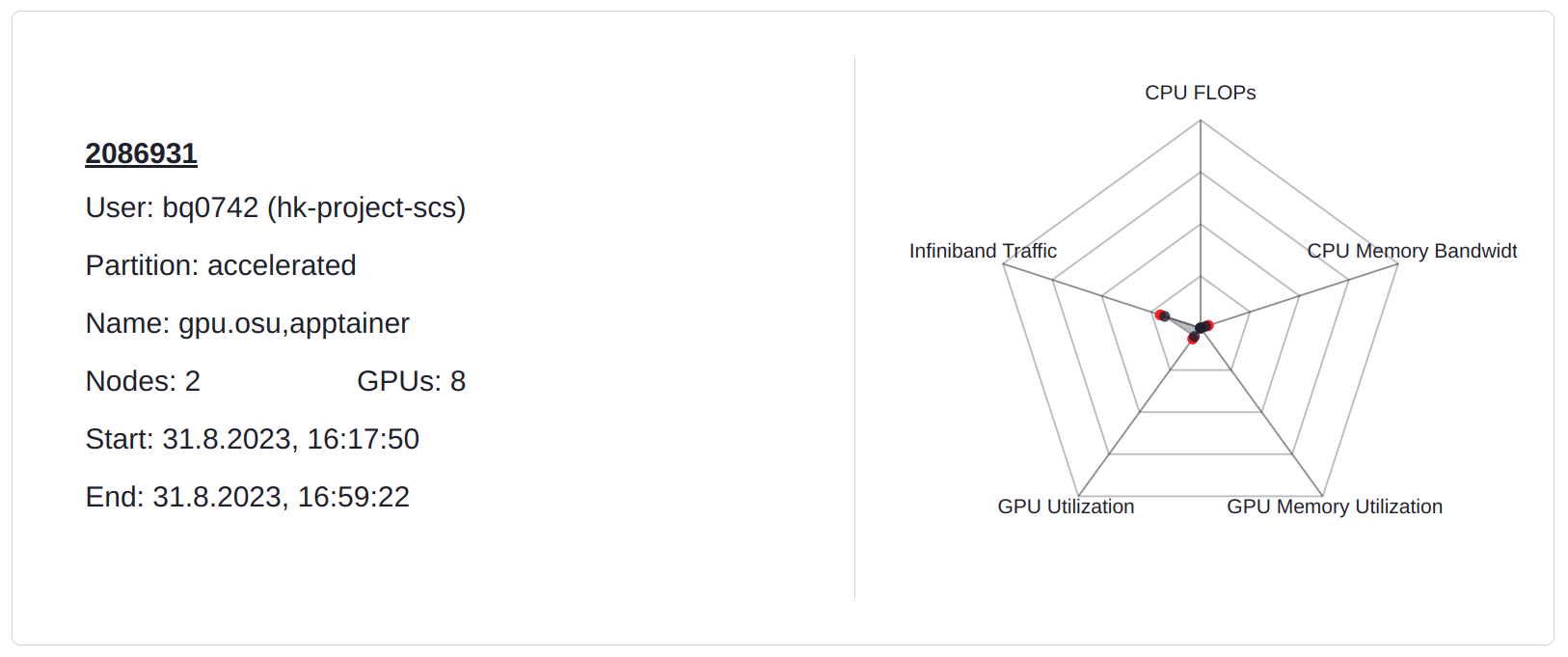

For each job, the Jobs page includes a so-called spider plot. This plot shows the performance limitations of the job at a glance and allows it to be categorized as memory bound, compute bound, or communication bound. For this purpose, the plot shows the average and maximum values of the following metrics:

- CPU floating point operations per second

- CPU memory bandwidth

- GPU utilization

- GPU memory utilization

- InfiniBand bandwidth
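The following Python sketch illustrates how average and maximum values of different metrics can be placed on a common 0 to 1 scale, so that they can be compared on one plot and the resource closest to its limit can be identified. The peak values in `PEAKS`, the metric keys and the sample data are all hypothetical; how JobMon actually scales the axes of its spider plot is not described here.

```python
from statistics import fmean

# Hypothetical per-node peak values used to scale each axis to the range 0..1.
PEAKS = {
    "cpu_flops": 4.0e12,    # FLOP/s
    "cpu_mem_bw": 4.0e11,   # byte/s
    "gpu_util": 100.0,      # percent
    "gpu_mem_util": 100.0,  # percent
    "ib_bw": 2.5e10,        # byte/s
}


def spider_axes(samples: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Map each metric to (normalized average, normalized maximum)."""
    return {
        metric: (fmean(values) / PEAKS[metric], max(values) / PEAKS[metric])
        for metric, values in samples.items()
    }


def dominant_metric(axes: dict[str, tuple[float, float]]) -> str:
    """Metric whose normalized average is closest to its peak."""
    return max(axes, key=lambda metric: axes[metric][0])


# Made-up samples of a memory-bound CPU job.
job = {
    "cpu_flops": [2.0e11, 3.0e11, 2.5e11],
    "cpu_mem_bw": [3.6e11, 3.8e11, 3.7e11],
    "gpu_util": [0.0, 0.0, 0.0],
    "gpu_mem_util": [0.0, 0.0, 0.0],
    "ib_bw": [1.0e8, 2.0e8, 1.5e8],
}
axes = spider_axes(job)
for metric, (avg, mx) in axes.items():
    print(f"{metric:>12}: avg {avg:6.1%}  max {mx:6.1%}")
print("closest to peak:", dominant_metric(axes))
```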

Examples: Spider Plot

DGEMM is able to use the entire floating point performance of the node. In doing so, it also uses a significant portion of the memory bandwidth.

Stream saturates the entire memory bandwidth. All other resources are under-utilized.

The OSU Micro-Benchmarks saturate the entire InfiniBand bandwidth. Some memory bandwidth utilization can also be seen, because messages are transferred from memory to memory.

The GPU implementation of DGEMM uses only a single GPU. Therefore, only a quarter of the available floating point performance of the GPUs is utilized. The same is reflected in the use of the memory bandwidth.

HPCG runs distributed across all GPUs of the node and saturates the entire memory bandwidth of these GPUs. The compute units are also well utilized.

InfiniBand communication between GPUs is not as performant as between CPUs. Therefore, OSU Micro-Benchmarks cannot utilize the entire available InfiniBand bandwidth.

Per-job page

Configuration options

- Select subset of nodes: Show only the nodes of interest

- Select time range: Zoom into the time ranges of interest

- Select subset of metrics: Focus on the metrics of interest

- Set Tag: Tags are a handy tool to mark jobs from different optimization steps (e.g. tags: "without optimization", "optimization A", "optimization B", ...) or jobs from different workflows (e.g. tags: "workflow A", "workflow B"). Filtering by tag on the Jobs page makes it easy to find all jobs with the same tag.

- Toggle for Automatic Scaling: Selects the upper and lower limits of the diagram's y-axis depending on the measured metric values

- Toggle for Changepoints: Changepoint detection tries to identify points in time at which the behavior of a performance metric changes. Identified changes are displayed as vertical lines in the diagram.

Examples: Changepoints

OMB performs communication with messages of increasing size. The performance changes caused by the increasing message size are visible only as steps in the graph.

OMB performs communication with messages of increasing size. Some of the points in time at which the performance changes due to the increasing message size are detected by the changepoint algorithm and marked by vertical lines.

-

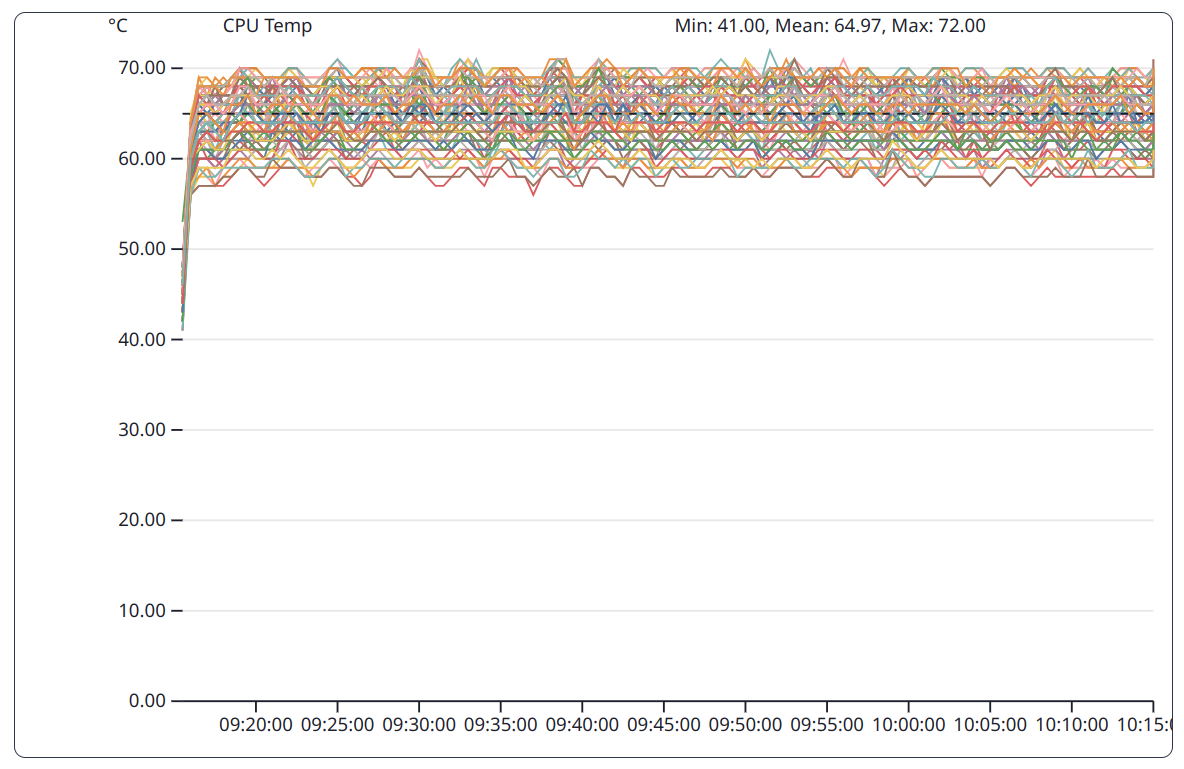

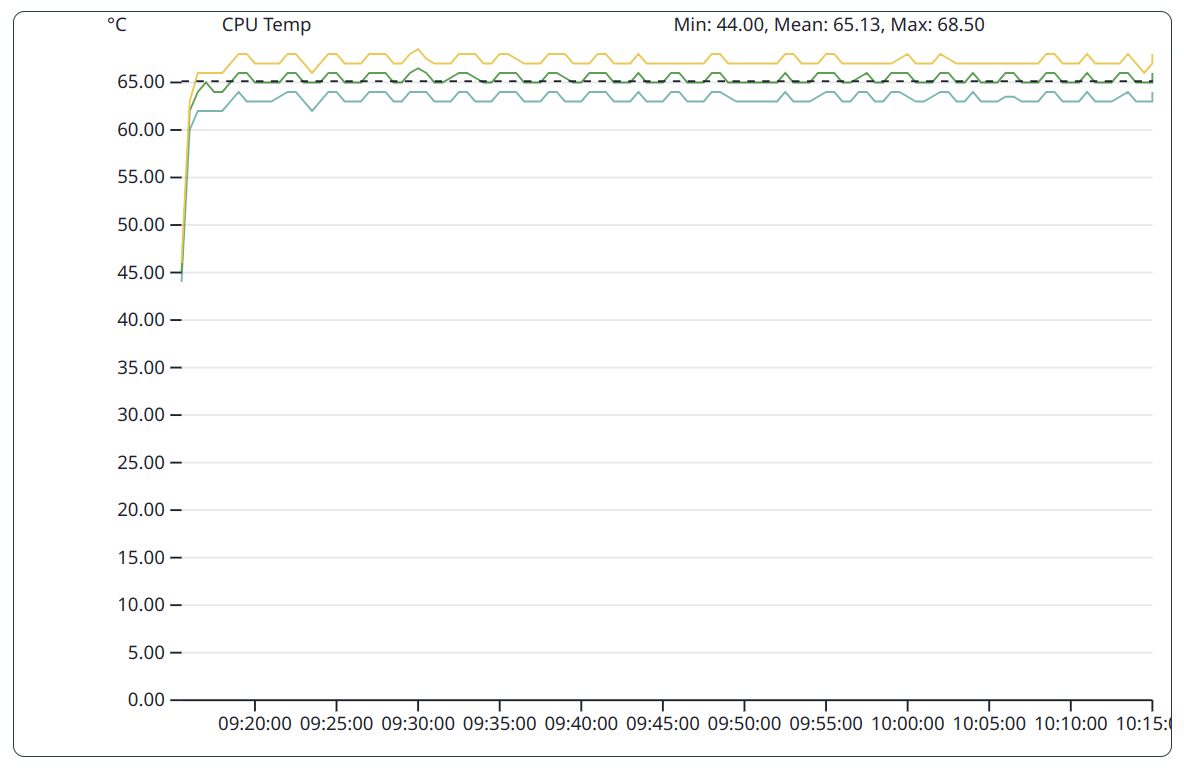

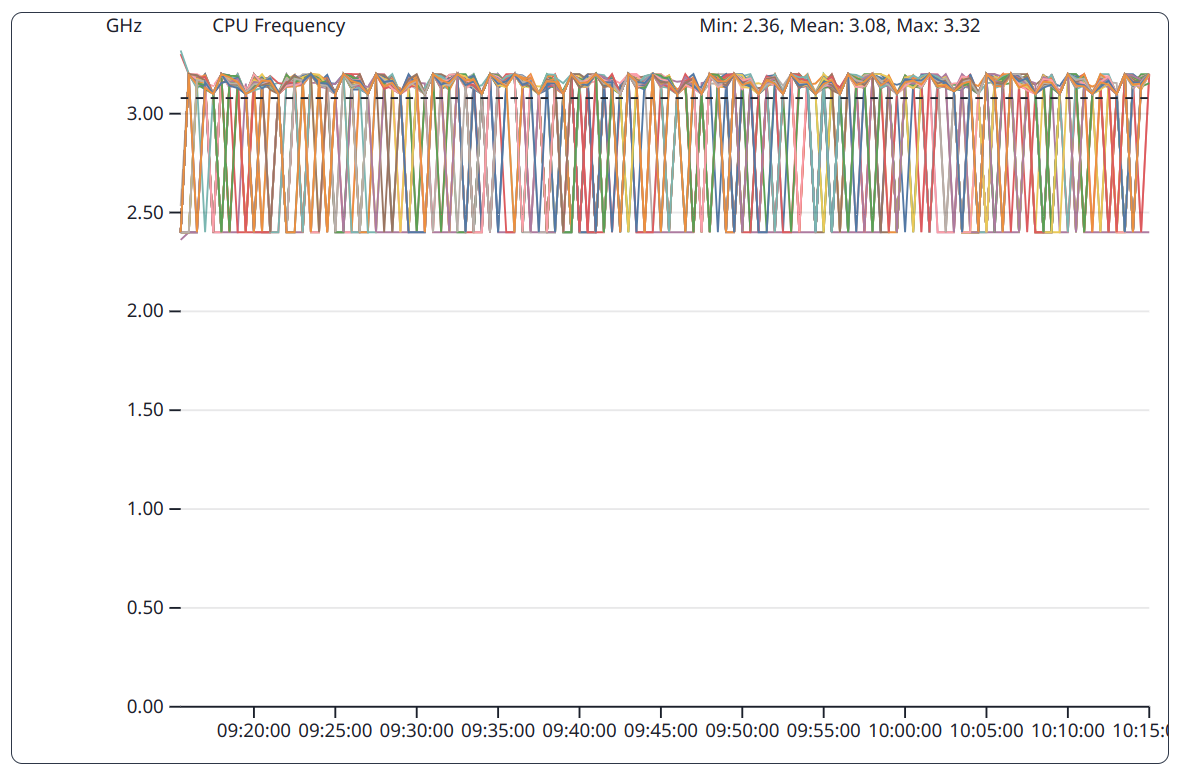

Toggle for Quantile view

  - Quantile view improves clarity when too many graphs are displayed in one diagram.

  - The information is condensed into only three graphs: the 25%, 50% and 75% quantiles (the quartiles).

  - The 25% quantile is the graph below which 25% of the measured values lie.

  - The 50% quantile is also known as the median.

  - The difference between the upper (75%) and the lower (25%) quantile is a measure of the spread of the metric values.

Examples: Quantile View

The CPU temperatures are collected per hardware thread, therefore the diagram appears very cluttered

The Quantile View shows the distribution of the CPU temperatures much clearer

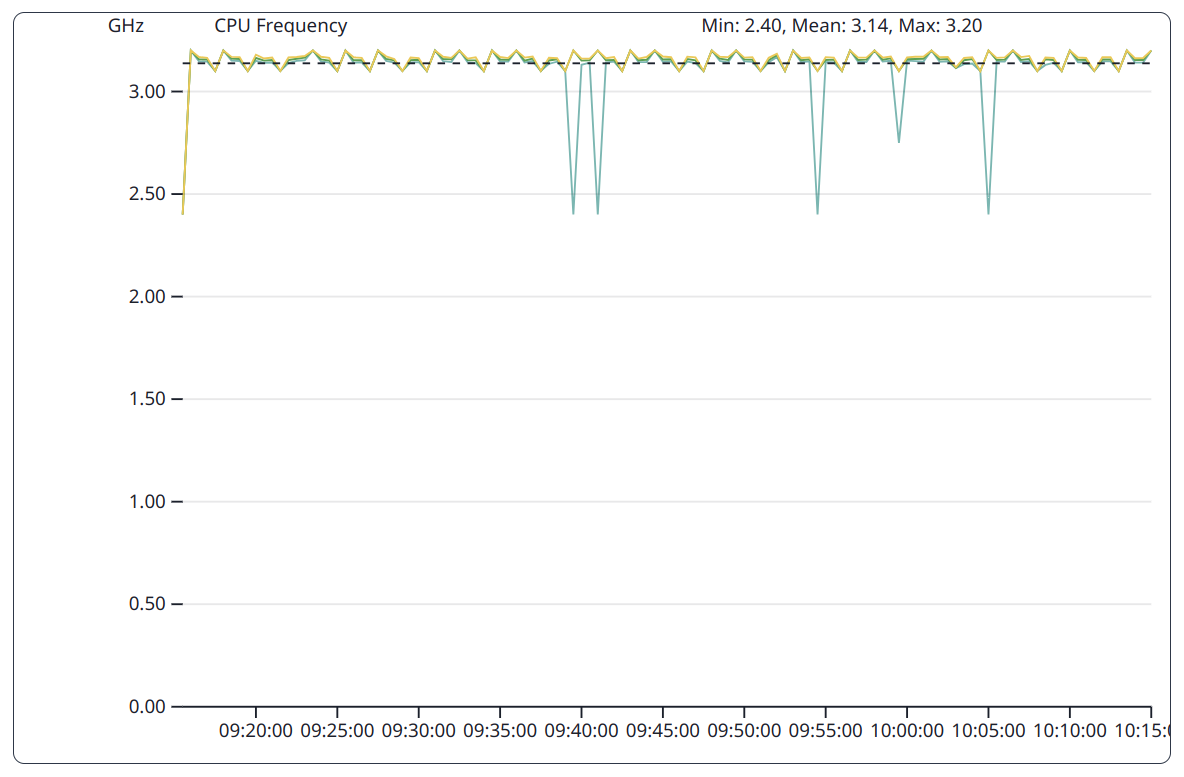

The CPU frequencies are collected per CPU core, therefore the diagram appears very cluttered

The Quantile View shows the distribution of the CPU frequencies much clearer
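Condensing many graphs into quartiles can be sketched in Python as follows: for each time step, the values of all series (e.g. one per hardware thread) are reduced to their 25%, 50% and 75% quantiles with `statistics.quantiles`. The sketch assumes that all series are sampled at the same time steps, and the temperature data is made up. Several quantile definitions exist; the `"inclusive"` interpolation method used here is only one of them and may differ from what JobMon uses.

```python
from statistics import quantiles


def quartile_bands(series: dict[str, list[float]]) -> list[tuple[float, ...]]:
    """Reduce equally sampled series to (25%, 50%, 75%) quantiles per time step."""
    bands = []
    for values_at_t in zip(*series.values()):  # one value from each series
        bands.append(tuple(quantiles(values_at_t, n=4, method="inclusive")))
    return bands


# Made-up CPU temperatures (degrees Celsius) of five hardware threads.
temperatures = {
    "hwthread0": [40.0, 55.0, 70.0],
    "hwthread1": [42.0, 57.0, 72.0],
    "hwthread2": [44.0, 59.0, 74.0],
    "hwthread3": [46.0, 61.0, 76.0],
    "hwthread4": [48.0, 63.0, 78.0],
}
for t, (q25, q50, q75) in enumerate(quartile_bands(temperatures)):
    print(f"t={t}: q25={q25} median={q50} q75={q75} spread={q75 - q25}")
```

However many series there are, the diagram only needs three graphs, and the distance between the outer two shows how evenly the values are distributed.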

Performance categories

Metrics are grouped into different performance categories.

Energy

This category offers diagrams for:

- CPU power consumption of DRAM channels and the package

- GPU power consumption

- Server system power consumption
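The diagrams in this category show power over time, while the energy used by a job is the integral of power over time. A minimal sketch, assuming power samples in watts with timestamps in seconds (the one-minute interval and the values below are hypothetical), integrates them with the trapezoidal rule:

```python
def energy_joules(timestamps_s: list[float], power_w: list[float]) -> float:
    """Integrate power samples (W) over time (s) with the trapezoidal rule."""
    if len(timestamps_s) != len(power_w):
        raise ValueError("timestamps and power samples must have the same length")
    energy = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        # Average of the two neighboring samples times the interval length.
        energy += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return energy


# Made-up samples of a node's power draw, one sample per minute.
timestamps = [0.0, 60.0, 120.0]
power = [300.0, 500.0, 500.0]
joules = energy_joules(timestamps, power)
print(f"{joules:.0f} J = {joules / 3.6e6:.3f} kWh")
```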

Examples: Category Energy

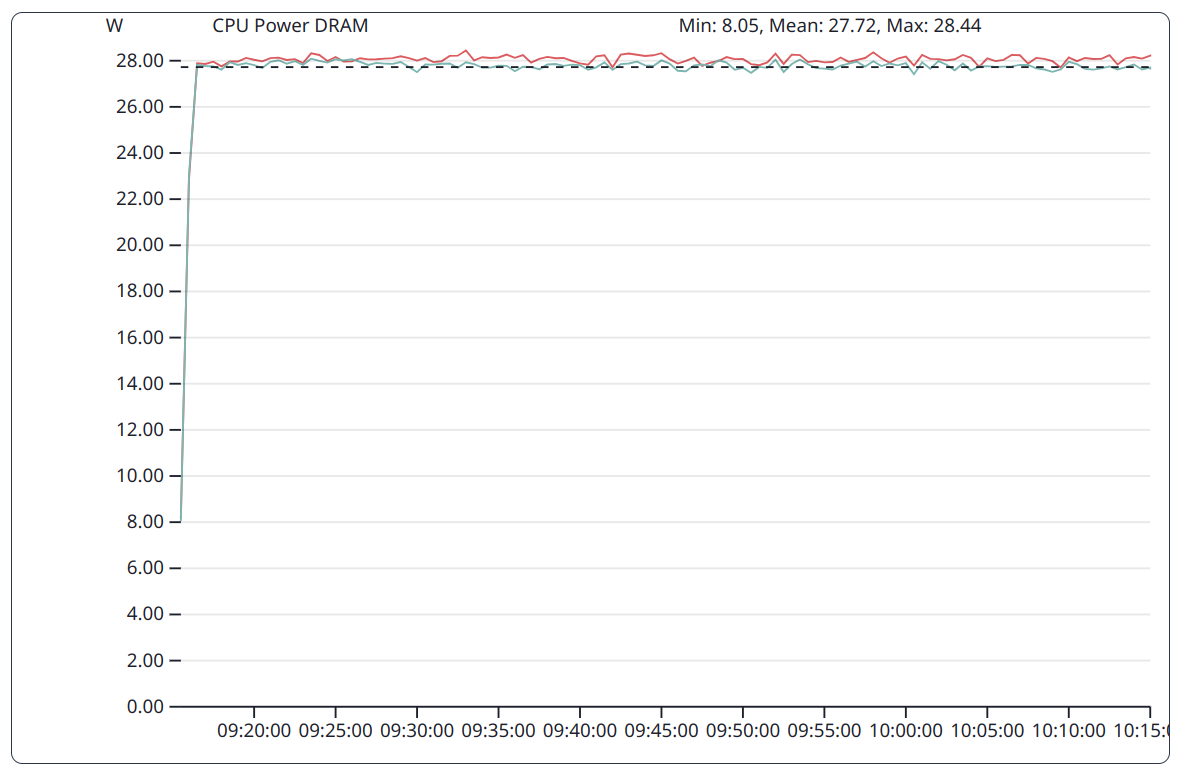

Stream, as a memory-bound benchmark, puts constantly high pressure on the DRAM subsystem. This is reflected in a constantly high power consumption of this subsystem.

DGEMM, as a compute-bound benchmark, puts less pressure on the DRAM subsystem. This is reflected in the varying power consumption of this subsystem over time.

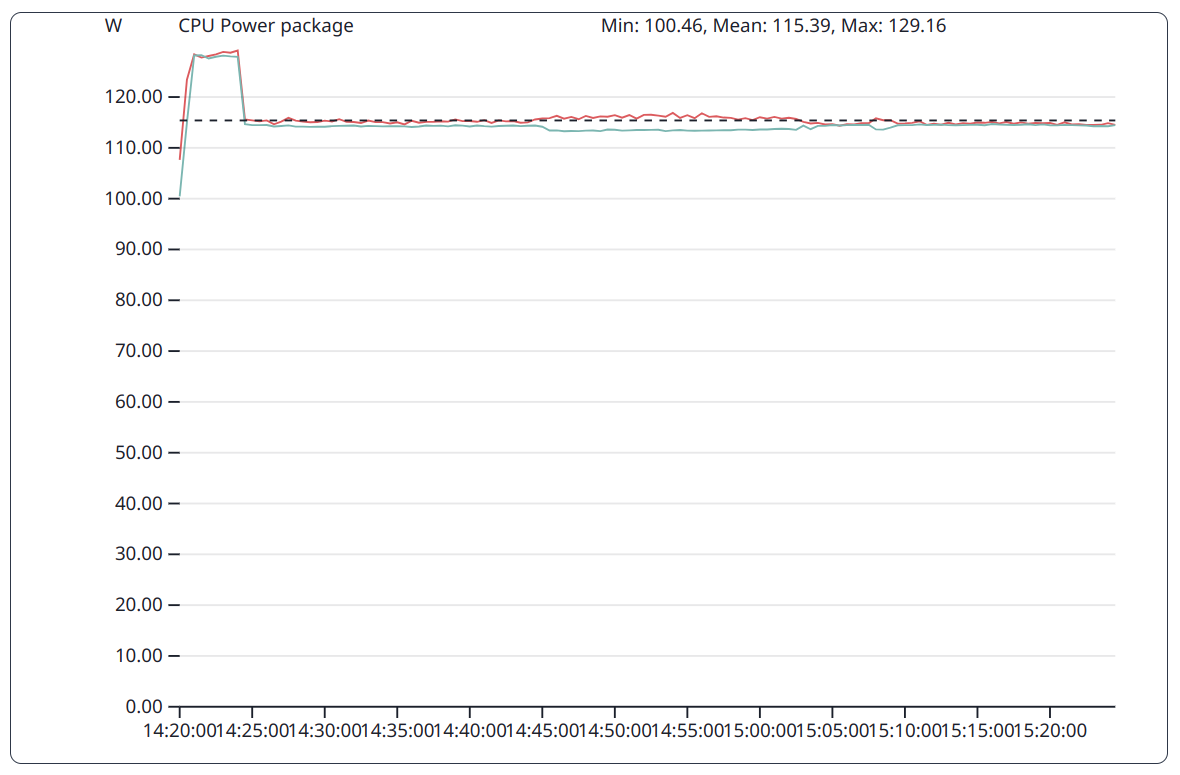

The GPU implementation of the HPCG benchmark has a preparation phase executed on the CPU and a computation phase executed on the GPU. During the first phase the power consumption of the CPU package is therefore higher than in the subsequent phase.

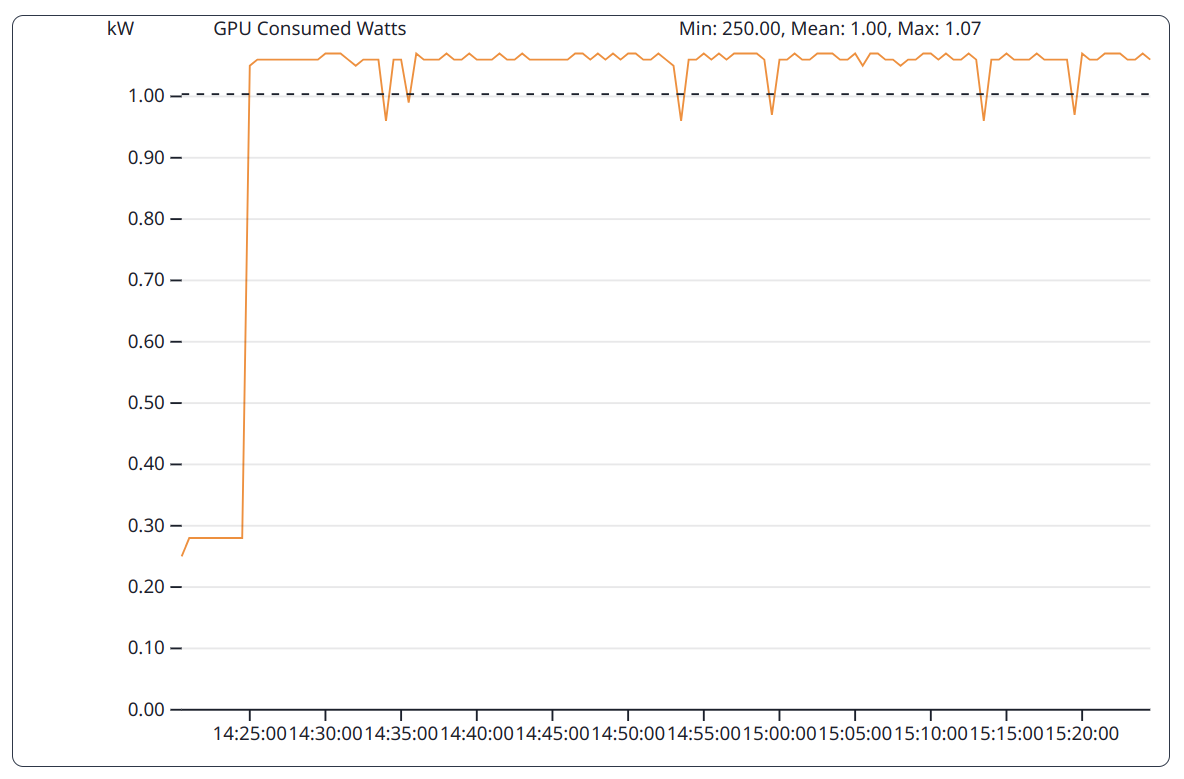

The GPU implementation of the HPCG benchmark has a preparation phase executed on the CPU and a computation phase executed on the GPU. During the first phase the power consumption of the GPUs is therefore lower than in the subsequent phase.

Filesystem

This category offers diagrams for, e.g., metadata operations, I/O throughput, ...

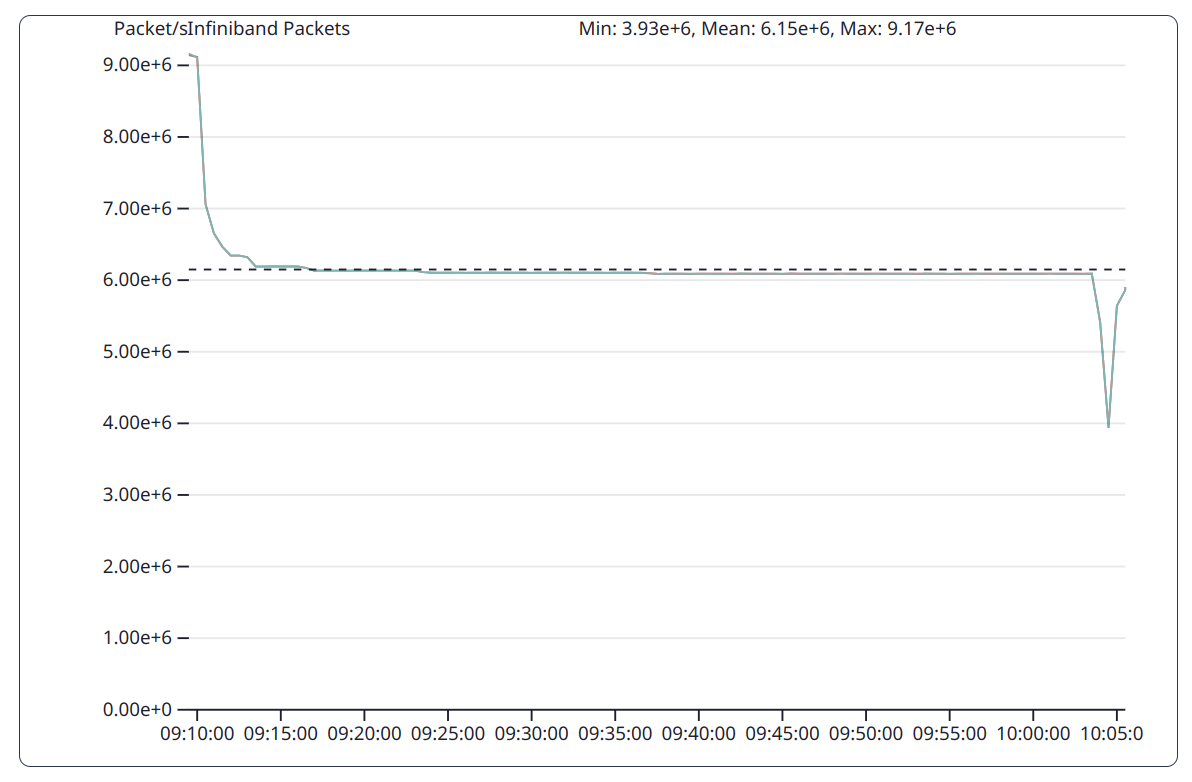

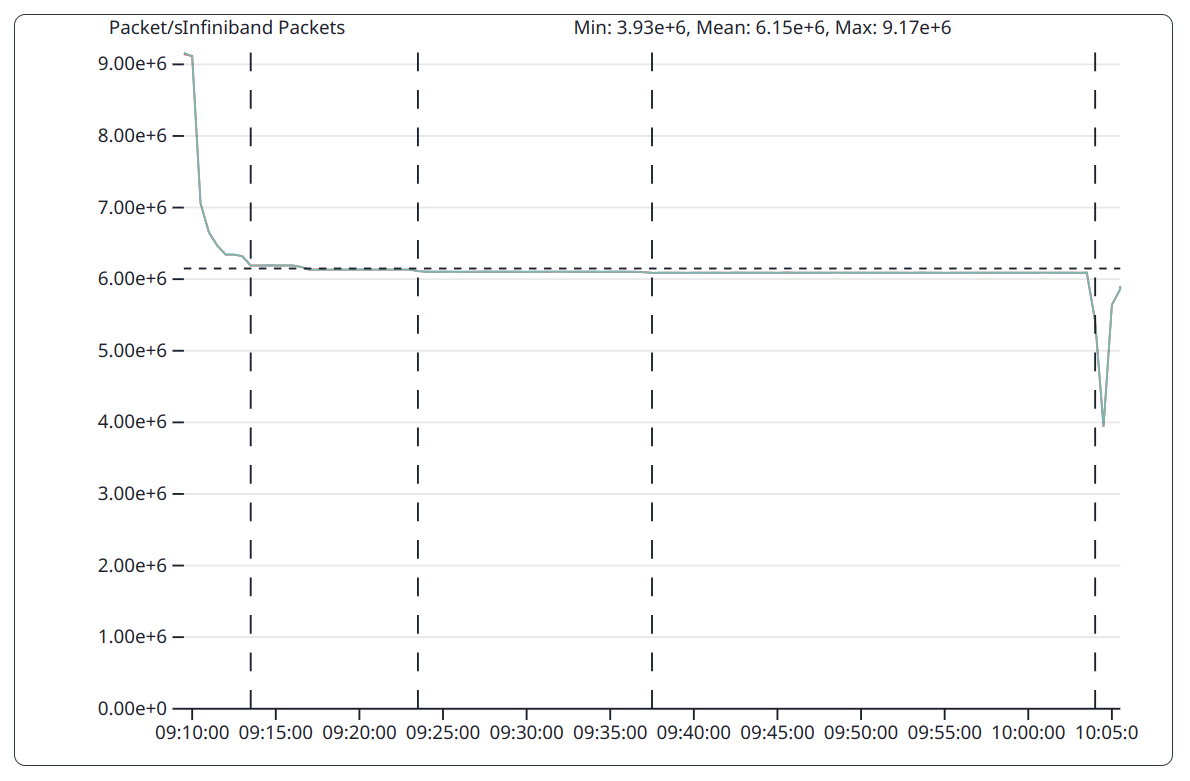

Interconnect

This category offers diagrams for:

- InfiniBand: Send, receive and aggregated bandwidth

- InfiniBand: Send, receive and aggregated number of packets

Examples: Category Interconnect

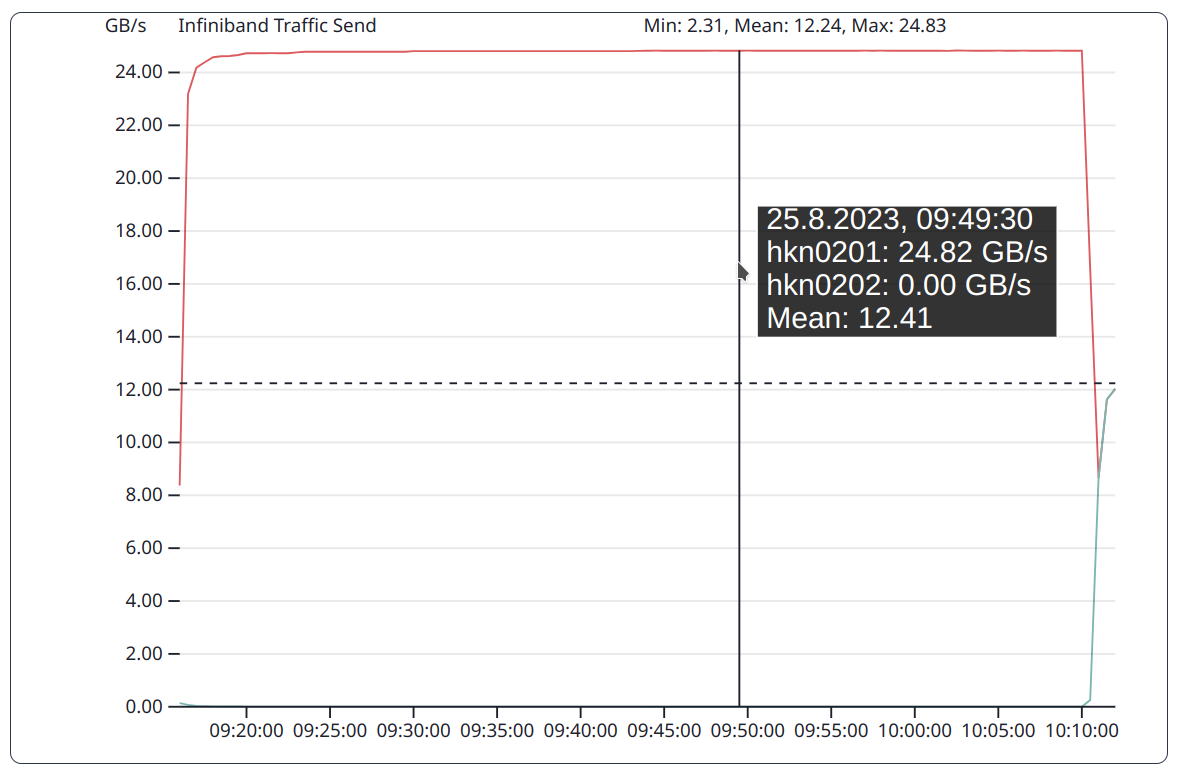

InfiniBand send bandwidth for OSU Micro-Benchmark. In the point to point communication node hkn0201 send data while node hkn0202 only receives data (send bandwidth is zero).

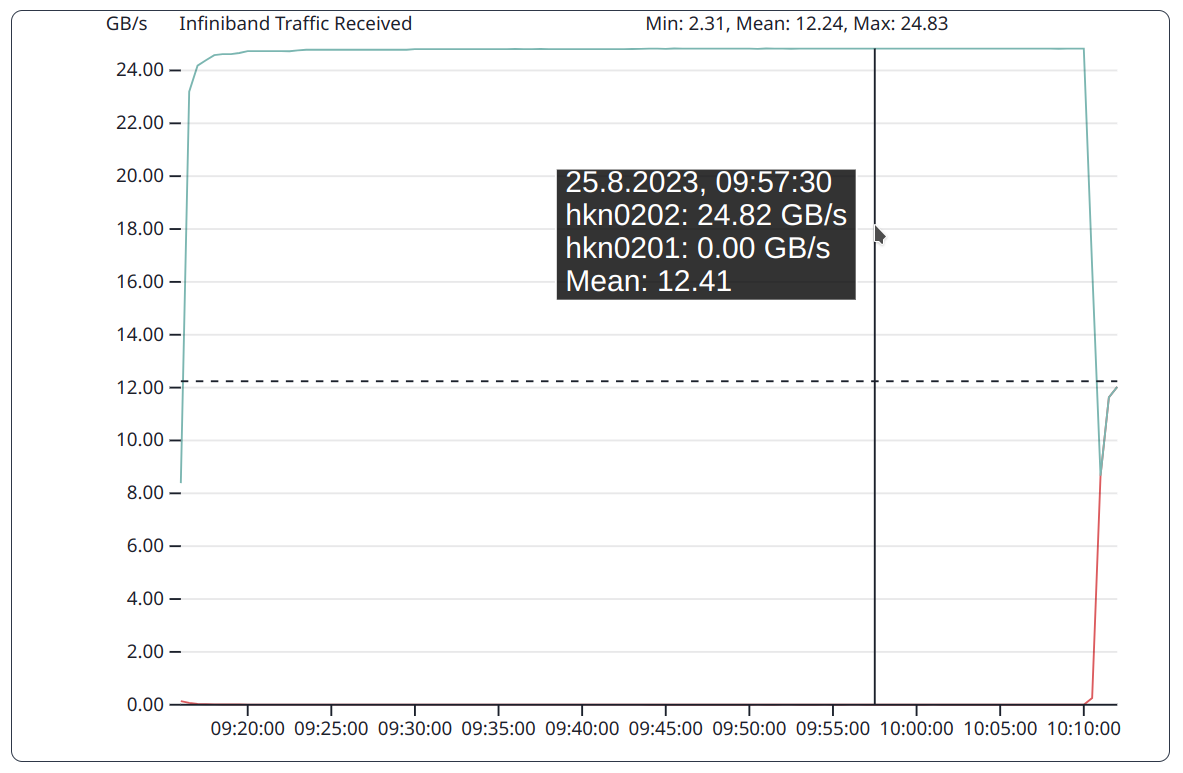

InfiniBand receive bandwidth for OSU Micro-Benchmark. In the point to point communication node hkn0202 receives data while node hkn0201 only sends data (receive bandwidth is zero).

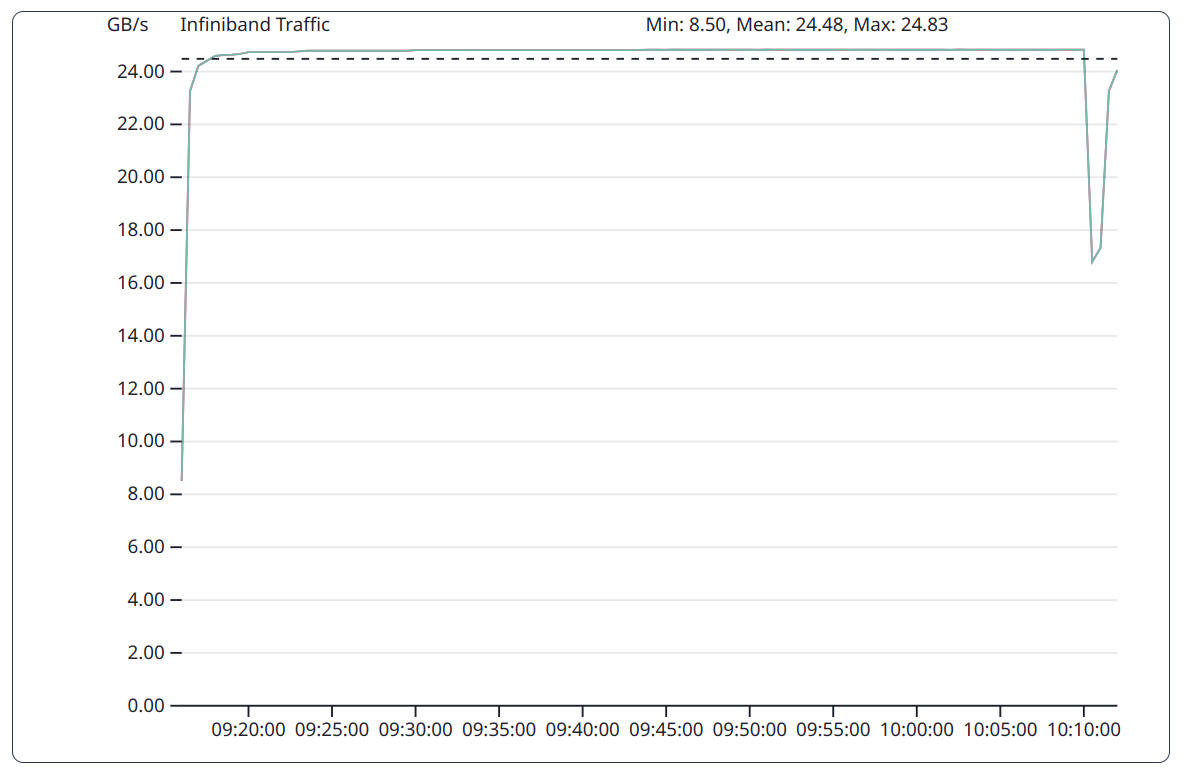

InfiniBand bandwidth for OSU Micro-Benchmark. In the point-to-point communication, both nodes use the same bandwidth, even though one of the nodes only sends data and the other one only receives data.
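Bandwidth values like these can be derived from two samples of a cumulative byte counter. The sketch below assumes counters that behave like the Linux InfiniBand `port_xmit_data` and `port_rcv_data` counters, which count in units of 4 bytes; whether JobMon's collector uses exactly these counters is an assumption. It also assumes that "aggregated" means send plus receive, which would explain why the sending and the receiving node show the same aggregated bandwidth. Counter wraparound is ignored, and the counter values are made up.

```python
# Assumption: counters count in units of 4 bytes, like port_xmit_data/port_rcv_data.
BYTES_PER_COUNT = 4


def rate(count_t0: int, count_t1: int, dt_s: float) -> float:
    """Bytes per second between two samples of a cumulative counter."""
    return (count_t1 - count_t0) * BYTES_PER_COUNT / dt_s


def port_bandwidths(xmit: tuple[int, int], rcv: tuple[int, int], dt_s: float):
    """Return (send, receive, aggregated) bandwidth in bytes per second,
    taking 'aggregated' to mean send + receive (an assumption)."""
    send = rate(*xmit, dt_s)
    receive = rate(*rcv, dt_s)
    return send, receive, send + receive


# Made-up counter samples taken 10 s apart on the two nodes of a point-to-point test.
sender = port_bandwidths(xmit=(0, 2_500_000_000), rcv=(0, 0), dt_s=10.0)
receiver = port_bandwidths(xmit=(0, 0), rcv=(0, 2_500_000_000), dt_s=10.0)
print("hkn0201 (send, receive, aggregated):", sender)
print("hkn0202 (send, receive, aggregated):", receiver)
```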

Memory

This category offers diagrams for:

- Amount of memory used on the system (CPU) and on the GPU

- CPU memory bandwidth

- GPU memory utilization (in %) and frequency

Examples: Category Memory

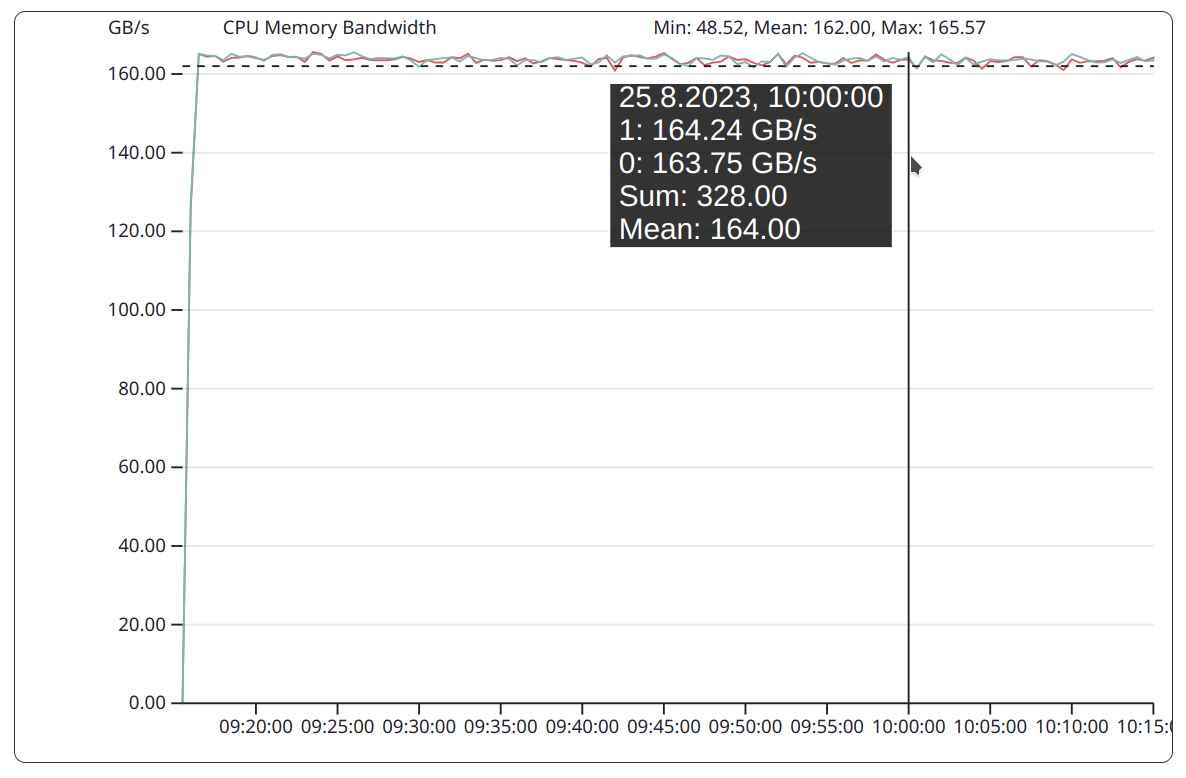

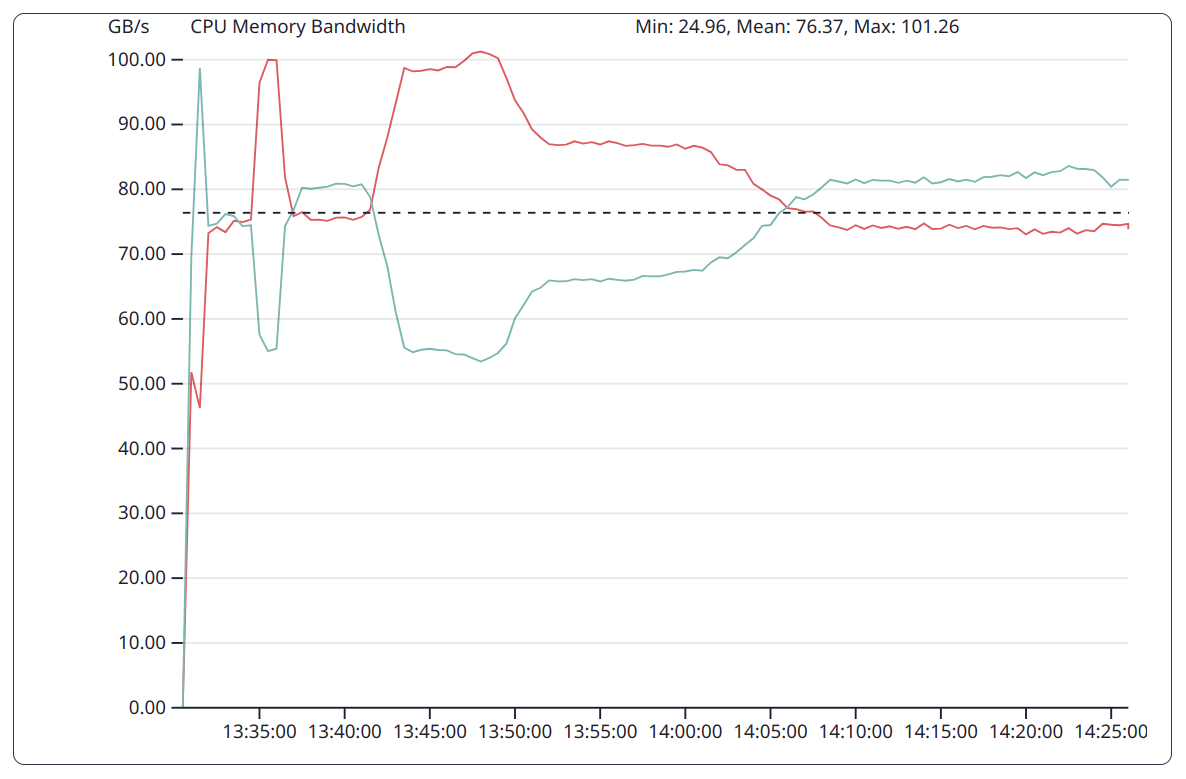

Stream, as a memory-bound benchmark, puts constantly high pressure on the memory subsystem.

DGEMM, as a compute-bound benchmark, puts less pressure on the memory subsystem. This is reflected in the varying bandwidth over time.

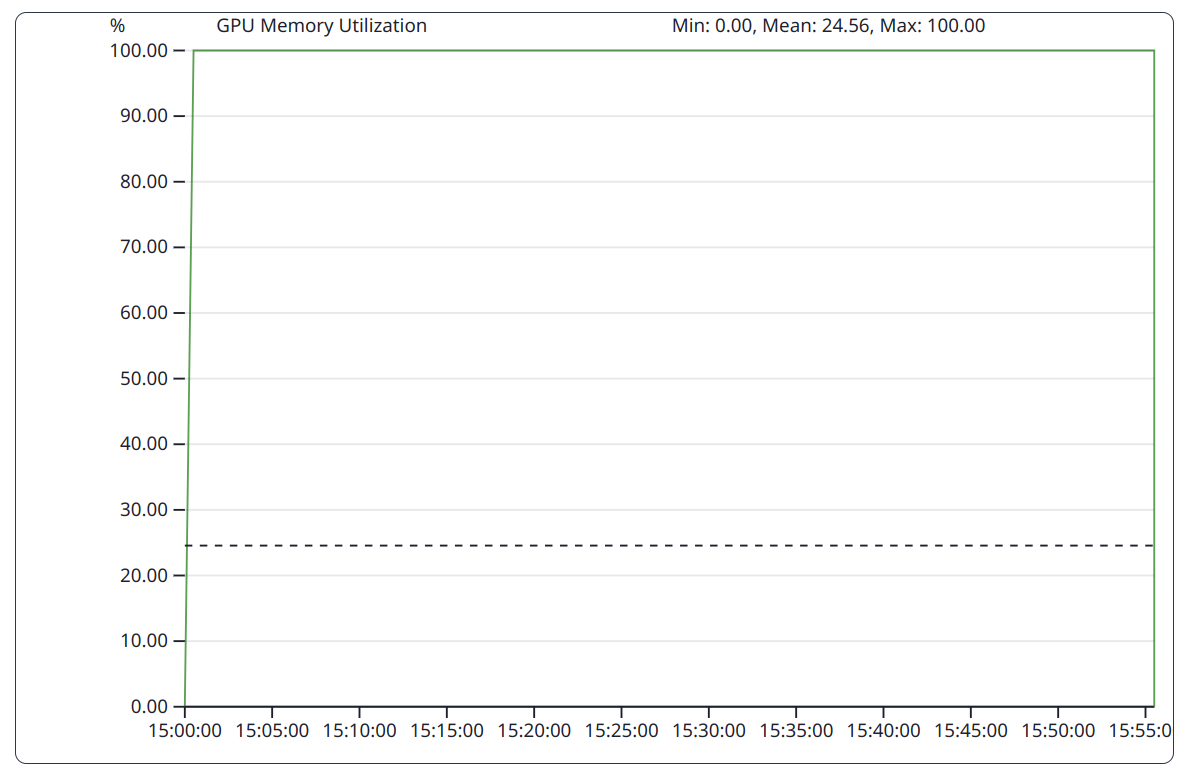

Babelstream as a memory bound benchmark fully utilizes the memory subsystem of the GPU.

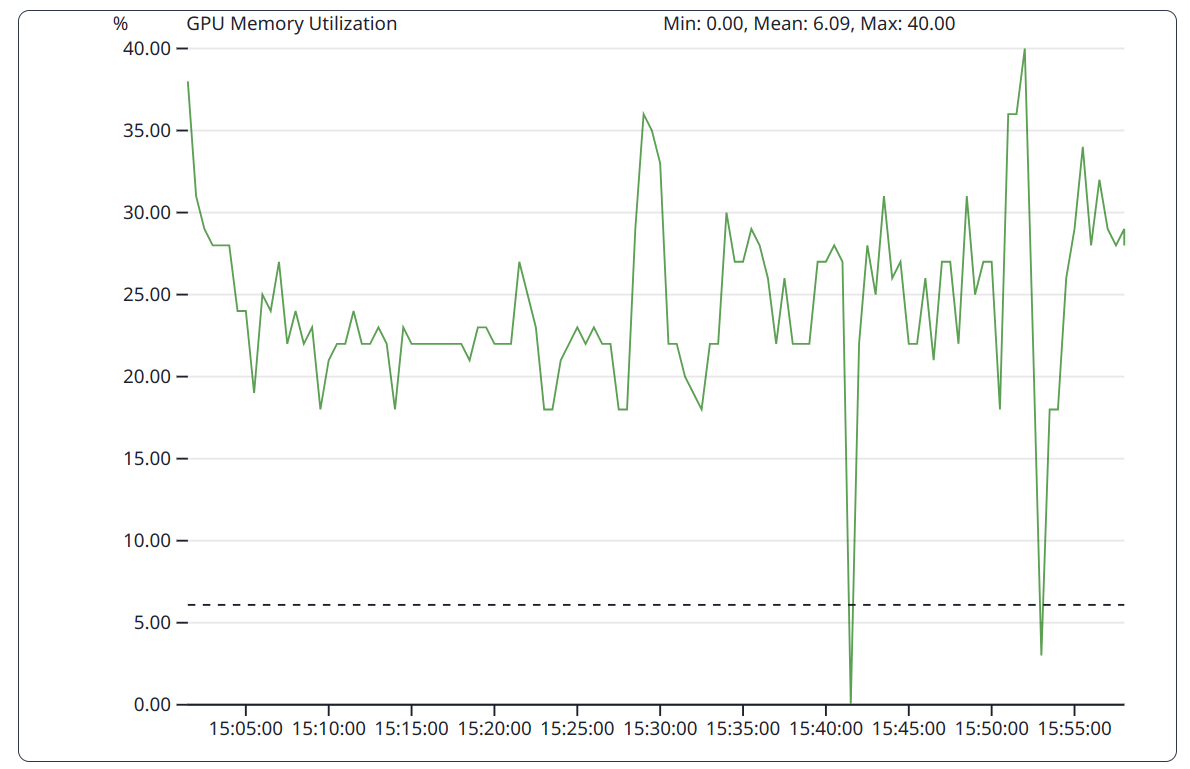

The GPU implementation of DGEMM as a compute bound benchmark does put less pressure on the GPU memory subsystem. This is reflected in the varying utilization over time.

Performance¶

This category offers diagrams for:

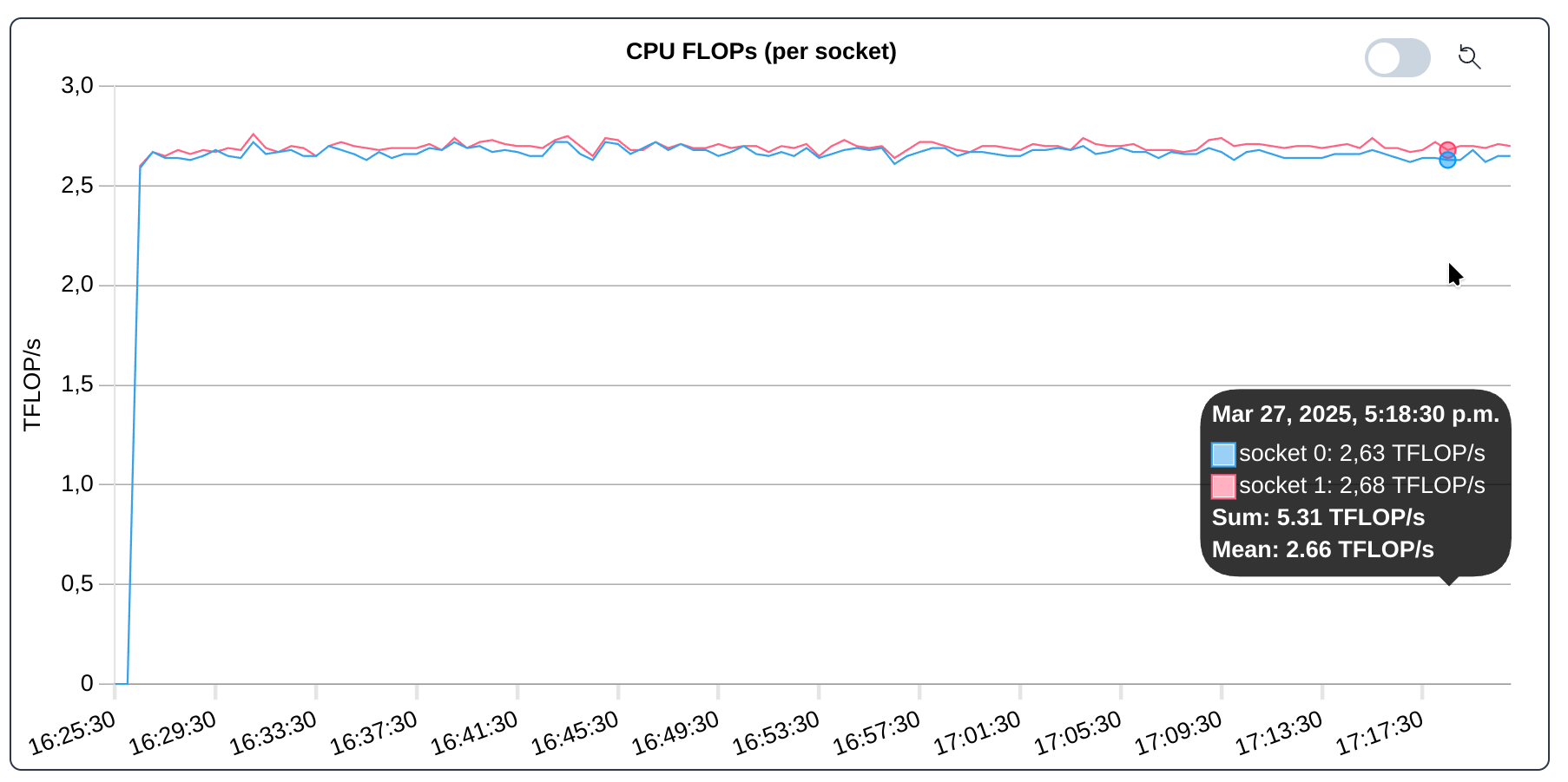

- Floating point operation per second (FLOPs), collected per hardware thread and aggregated per core or per socket

- Instructions per cycle (IPC), collected per hardware thread and aggregated per core or per socket

- CPU time spend in kernel and in user space

- One Minute Linux load average

- GPU utilization

- CPU and GPU frequency

Examples: Category Performance

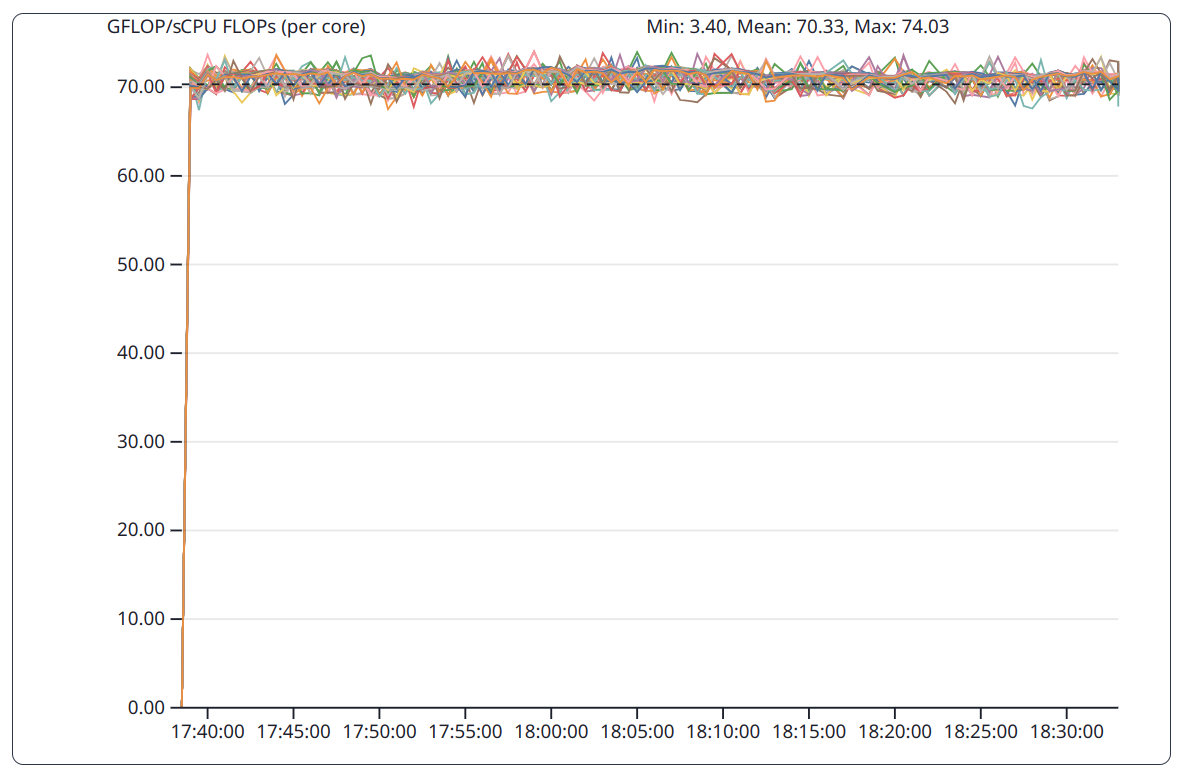

Floating point operations per second (FLOP/s) are collected per hardware thread. Because the hardware threads of a core share the same compute units, aggregating per core may be more appropriate.

Floating point operations per second (FLOP/s) are collected per hardware thread. To check whether the CPU sockets are utilized evenly, aggregating per socket can be helpful.
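Aggregating per-thread values per core or per socket amounts to summing over a topology mapping. The topology below (2 sockets, 2 cores per socket, 2 hardware threads per core) and the FLOP/s values are hypothetical; on a real Linux system the mapping could be taken from `lscpu` or `/sys/devices/system/cpu`, which this sketch does not do.

```python
from collections import defaultdict

# Hypothetical topology: hardware thread id -> (socket id, core id).
# Threads i and i + 4 are the two hardware threads of the same core.
TOPOLOGY = {
    0: (0, 0), 4: (0, 0),
    1: (0, 1), 5: (0, 1),
    2: (1, 2), 6: (1, 2),
    3: (1, 3), 7: (1, 3),
}


def aggregate(per_thread: dict[int, float], level: str) -> dict[int, float]:
    """Sum per-hardware-thread values per 'core' or per 'socket'."""
    position = {"socket": 0, "core": 1}[level]
    totals: defaultdict[int, float] = defaultdict(float)
    for thread, value in per_thread.items():
        totals[TOPOLOGY[thread][position]] += value
    return dict(totals)


# Made-up FLOP/s: only one hardware thread on each core of socket 0 is busy.
flops = {0: 10e9, 4: 0.0, 1: 10e9, 5: 0.0, 2: 0.0, 6: 0.0, 3: 0.0, 7: 0.0}
print("per core:  ", aggregate(flops, "core"))
print("per socket:", aggregate(flops, "socket"))
```

In this made-up case the per-socket view immediately shows that socket 1 is idle, while the per-core view shows that each busy core is used by only one of its hardware threads.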

Temperature

This category offers diagrams for:

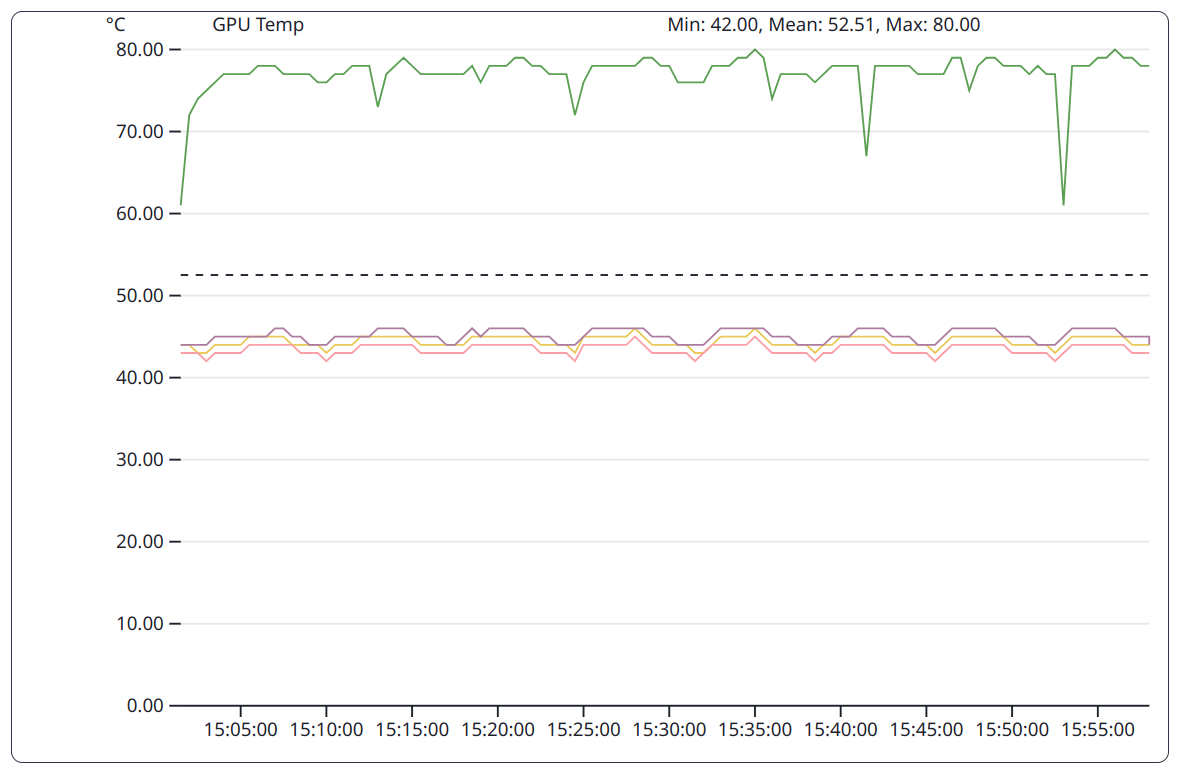

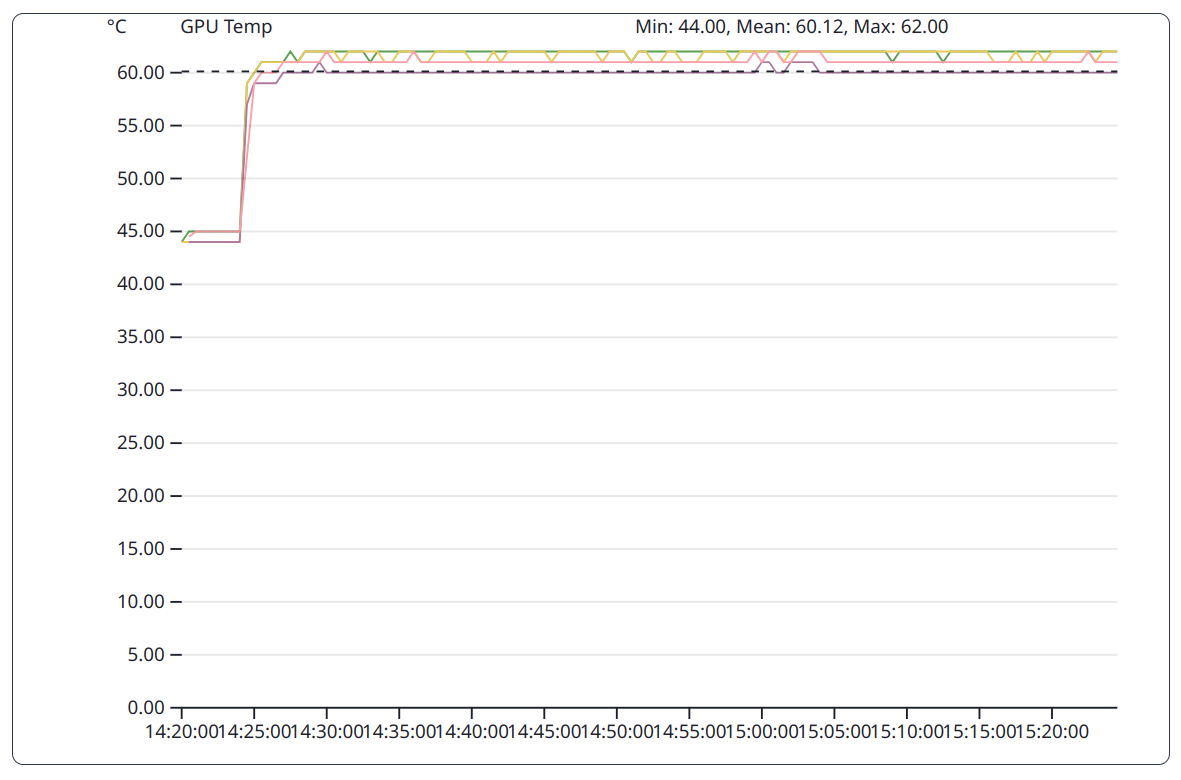

- CPU and GPU temperature

Examples: Category Temperature

The GPU implementation of the DGEMM benchmark only utilizes one of the GPUs. Only this GPU gets hot, while the other GPUs maintain a lower temperature.

The GPU implementation of the HPCG benchmark has a preparation phase executed on the CPU and a computation phase executed on the GPU. During the first phase, the GPU is not utilized and therefore stays cooler than in the subsequent phase.

Additional Features

- For multi-node jobs, there is a configuration option to select the function used to aggregate the per-node values (e.g. average, sum, maximum)

- Live view of still running jobs

- Download of metrics as a CSV file:

  - All metrics as measured by the collector

  - Ready for use, e.g. in a spreadsheet application or in Python

- Outlook: For future versions of JobMon, it is planned to automatically analyze each job and assign tags for detected characteristics.
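Because the CSV download is intended for use in tools such as Python, the sketch below reads CSV data with the standard `csv` module and combines the values of all nodes per time step with an average, sum or maximum, similar to the multi-node aggregation option. The column names `timestamp`, `node` and `value` and the sample rows are hypothetical; the actual layout of the downloaded file may differ, so check its header first. For a real file, `open(path, newline="")` would replace the in-memory `io.StringIO` used here.

```python
import csv
import io
from statistics import fmean

# Hypothetical CSV layout: one row per sample and node.
SAMPLE_CSV = """timestamp,node,value
0,hkn0201,10.0
0,hkn0202,30.0
60,hkn0201,20.0
60,hkn0202,40.0
"""

AGGREGATORS = {"avg": fmean, "sum": sum, "max": max}


def aggregate_nodes(rows: list[dict[str, str]], how: str) -> dict[int, float]:
    """Combine the per-node values of each time step with avg, sum or max."""
    by_time: dict[int, list[float]] = {}
    for row in rows:
        by_time.setdefault(int(row["timestamp"]), []).append(float(row["value"]))
    func = AGGREGATORS[how]
    return {t: func(values) for t, values in sorted(by_time.items())}


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
for how in AGGREGATORS:
    print(how, aggregate_nodes(rows, how))
```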