Hardware Overview

HoreKa is a distributed-memory parallel computer consisting of hundreds of individual servers called "nodes". Each node has two processors (Intel Xeon on most nodes, AMD EPYC on the HoreKa Teal nodes), at least 256 GB of local memory, local NVMe SSDs and two high-performance network adapters. All nodes are connected by a fast, low-latency InfiniBand 4X HDR interconnect (200 Gbit/s). In addition, two large parallel file systems are connected to HoreKa.

The operating system installed on every node is Red Hat Enterprise Linux (RHEL) 8.x. On top of this operating system, a set of (open-source) software components such as Slurm is installed. Some of these components are of special interest to end users and are briefly discussed here. Others matter mainly to system administrators and are therefore not covered by this documentation.

The different server systems in HoreKa have different roles and offer different services.

Login Nodes

The login nodes are the only nodes directly accessible to end users. These nodes can be used for interactive logins, file management, software development and interactive pre- and postprocessing. Four nodes are dedicated as login nodes.

Compute Nodes

The vast majority of the nodes (799 out of 813) are dedicated to computations. These nodes are not directly accessible to users; instead, calculations have to be submitted to a so-called batch system. The batch system manages all compute nodes and executes the queued jobs according to their priority as soon as the required resources become available. A single job may use hundreds of compute nodes and many thousands of CPU cores at once.
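As a rough illustration of what submitting work to the batch system involves, the sketch below generates the text of a minimal Slurm batch script. It assumes only the generic `sbatch` options `--nodes`, `--ntasks-per-node` and `--time`; the helper `make_batch_script` and the program name `./my_app` are hypothetical, and site-specific options such as the partition or account are deliberately left out because this section does not name them.

```python
def make_batch_script(nodes: int, tasks_per_node: int, walltime: str, command: str) -> str:
    """Return the text of a minimal Slurm batch script (illustrative helper)."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --nodes={nodes}",                      # number of compute nodes
        f"#SBATCH --ntasks-per-node={tasks_per_node}",   # tasks (e.g. MPI ranks) per node
        f"#SBATCH --time={walltime}",                    # wall-clock limit, HH:MM:SS
        "",
        f"srun {command}",                               # launch the tasks on the allocated nodes
    ]
    return "\n".join(lines) + "\n"


# Two nodes with one task per physical core of a 76-core node.
# "./my_app" is a placeholder for the user's own program.
script = make_batch_script(nodes=2, tasks_per_node=76, walltime="00:30:00", command="./my_app")
print(script)
```

Written to a file, such a script would typically be handed to the batch system with `sbatch`, after which the job waits in the queue until the requested nodes are free.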

Data Mover Nodes

Two nodes are reserved for data transfers between the different HPC file systems and other storage systems.

Administrative Service Nodes

Some nodes provide additional services like resource management, external network connections, monitoring, security etc. These nodes can only be accessed by system administrators.

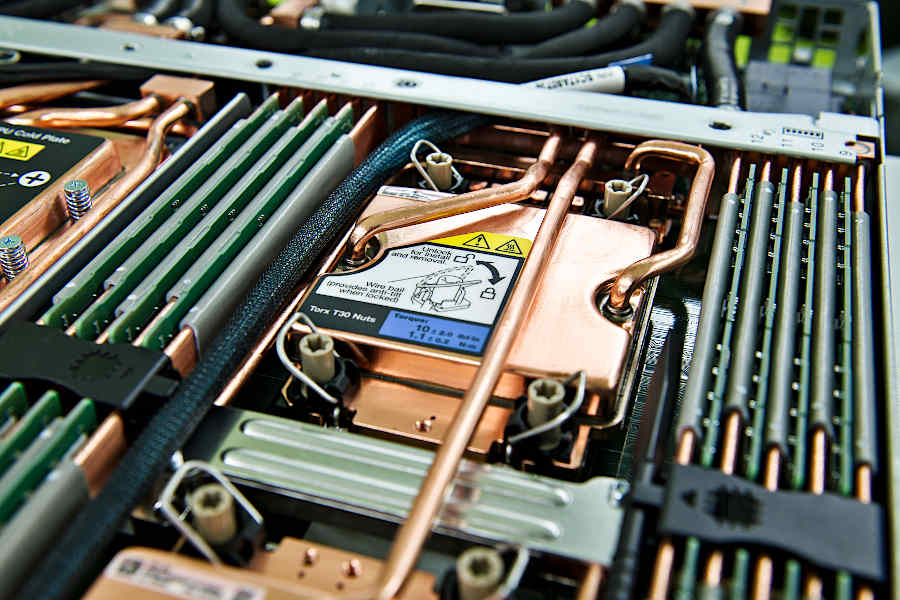

HoreKa compute node hardware

| Node Type | Standard / High-Memory / Extra-Large-Memory nodes (HoreKa Blue) | Accelerated (HoreKa Green) | Accelerated (HoreKa Teal) |
|---|---|---|---|
| No. of nodes | 570 / 32 / 8 | 167 | 22 |
| CPUs | Intel Xeon Platinum 8368 | Intel Xeon Platinum 8368 | AMD EPYC 9354 |
| CPU Sockets per node | 2 | 2 | 2 |
| CPU Cores per node | 76 | 76 | 64 |
| CPU Threads per node | 152 | 152 | 128 |
| Cache L1 | 64K (per core) | 64K (per core) | 64K (per core) |
| Cache L2 | 1 MB (per core) | 1 MB (per core) | 1 MB (per core) |
| Cache L3 | 57 MB (shared, per CPU) | 57 MB (shared, per CPU) | 256 MB (shared, per CPU) |
| Main memory | 256 GB / 512 GB / 4096 GB | 512 GB | 768 GB |
| Accelerators | - | 4x NVIDIA A100-40 | 4x NVIDIA H100-94 |
| Memory per accelerator | - | 40 GB | 94 GB |
| Local disks | 960 GB NVMe SSD / 960 GB NVMe SSD / 7 x 3.84 TB NVMe SSD | 960 GB NVMe SSD | 2 x 3.84 TB NVMe SSD |
| Interconnect | InfiniBand HDR | InfiniBand HDR | InfiniBand HDR |
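The per-node figures in the table can be combined into system-wide totals. The following sketch does this arithmetic; the `NodeGroup` class and the group labels are illustrative names, while the counts are taken directly from the table. The totals count compute nodes only and are derived values, not officially published figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    name: str
    nodes: int
    cores_per_node: int
    memory_gb: int
    gpus_per_node: int


# Values copied from the compute node hardware table.
GROUPS = [
    NodeGroup("Blue standard", 570, 76, 256, 0),
    NodeGroup("Blue high-memory", 32, 76, 512, 0),
    NodeGroup("Blue extra-large-memory", 8, 76, 4096, 0),
    NodeGroup("Green", 167, 76, 512, 4),
    NodeGroup("Teal", 22, 64, 768, 4),
]


def totals(groups):
    """Sum nodes, CPU cores, main memory and accelerators over all groups."""
    return {
        "nodes": sum(g.nodes for g in groups),
        "cores": sum(g.nodes * g.cores_per_node for g in groups),
        "memory_gb": sum(g.nodes * g.memory_gb for g in groups),
        "gpus": sum(g.nodes * g.gpus_per_node for g in groups),
    }


summary = totals(GROUPS)
print(summary)
# {'nodes': 799, 'cores': 60460, 'memory_gb': 297472, 'gpus': 756}
```

The node total of 799 matches the number of compute nodes stated in the overview.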

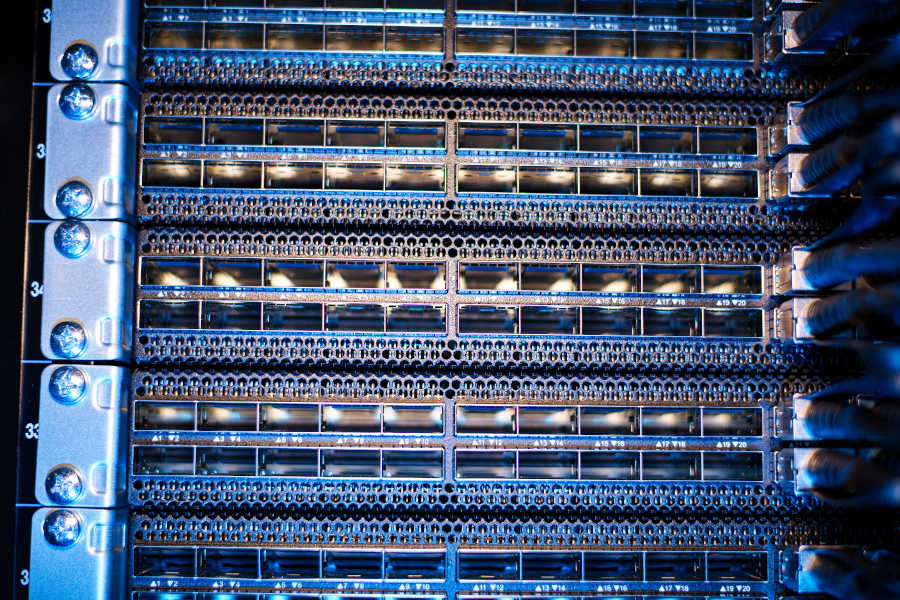

Interconnect

An important component of HoreKa is the InfiniBand 4X HDR 200 Gbit/s interconnect. All nodes are attached to this high-throughput, very low-latency (approximately 1 microsecond) network. InfiniBand is well suited to communication-intensive applications, for example those that perform many collective MPI operations.
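To get a feel for what these two numbers mean in practice, the sketch below uses a simple latency-plus-bandwidth model for a single point-to-point message. This is an idealized assumption: it treats 200 Gbit/s as the usable rate of one link and ignores protocol overhead, software overhead and network contention, so real transfers will be slower. The function name `transfer_time` is illustrative.

```python
LINK_RATE_BITS_PER_S = 200e9  # nominal InfiniBand HDR rate, 200 Gbit/s
LATENCY_S = 1e-6              # approximate network latency, 1 microsecond


def transfer_time(message_bytes: int) -> float:
    """Idealized time in seconds to send one message: latency + size / bandwidth."""
    return LATENCY_S + message_bytes * 8 / LINK_RATE_BITS_PER_S


# Message size at which the bandwidth term equals the latency term.
crossover_bytes = LATENCY_S * LINK_RATE_BITS_PER_S / 8

for size in (8, 25_000, 2**30):
    print(f"{size:>12} bytes: {transfer_time(size) * 1e6:12.3f} microseconds")
print(f"crossover at {crossover_bytes:.0f} bytes")
```

In this model, messages much smaller than about 25 kB are dominated by latency, while larger ones are dominated by bandwidth. This is one reason why applications that exchange many small messages, such as frequent collective operations, benefit from a low-latency network.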